Parameter evaluation

Here I present the results of the sensitivity analysis.

Libraries

Packages needed for the analysis are loaded. If the libraries do not exist locally, they will be downloaded.

list.of.packages <-

c(

"DescTools",

"tidyverse",

"readxl",

"data.table",

"knitr",

"zoo",

"imputeTS",

"ggthemes",

"rcompanion",

"mgsub",

"R.utils",

"here",

"stringr",

"pander",

"egg",

"rsm",

"ggrepel",

"cowplot",

"viridis",

"kableExtra"

)

new.packages <-

list.of.packages[!(list.of.packages %in% installed.packages()[, "Package"])]

#Download packages that are not already present in the library

if (length(new.packages))

install.packages(new.packages, force = TRUE, dependencies = TRUE)

packages_load <-

lapply(list.of.packages, require, character.only = TRUE)

#Print warning if there is a problem with installing/loading some of packages

if (any(as.numeric(packages_load) == 0)) {

warning(paste("Package/s: ", paste(list.of.packages[packages_load != TRUE], sep = ", "), "not loaded!"))

} else {

print("All packages were successfully loaded.")

}## [1] "All packages were successfully loaded."rm(list.of.packages, new.packages, packages_load)Leaf Wetness Estimation

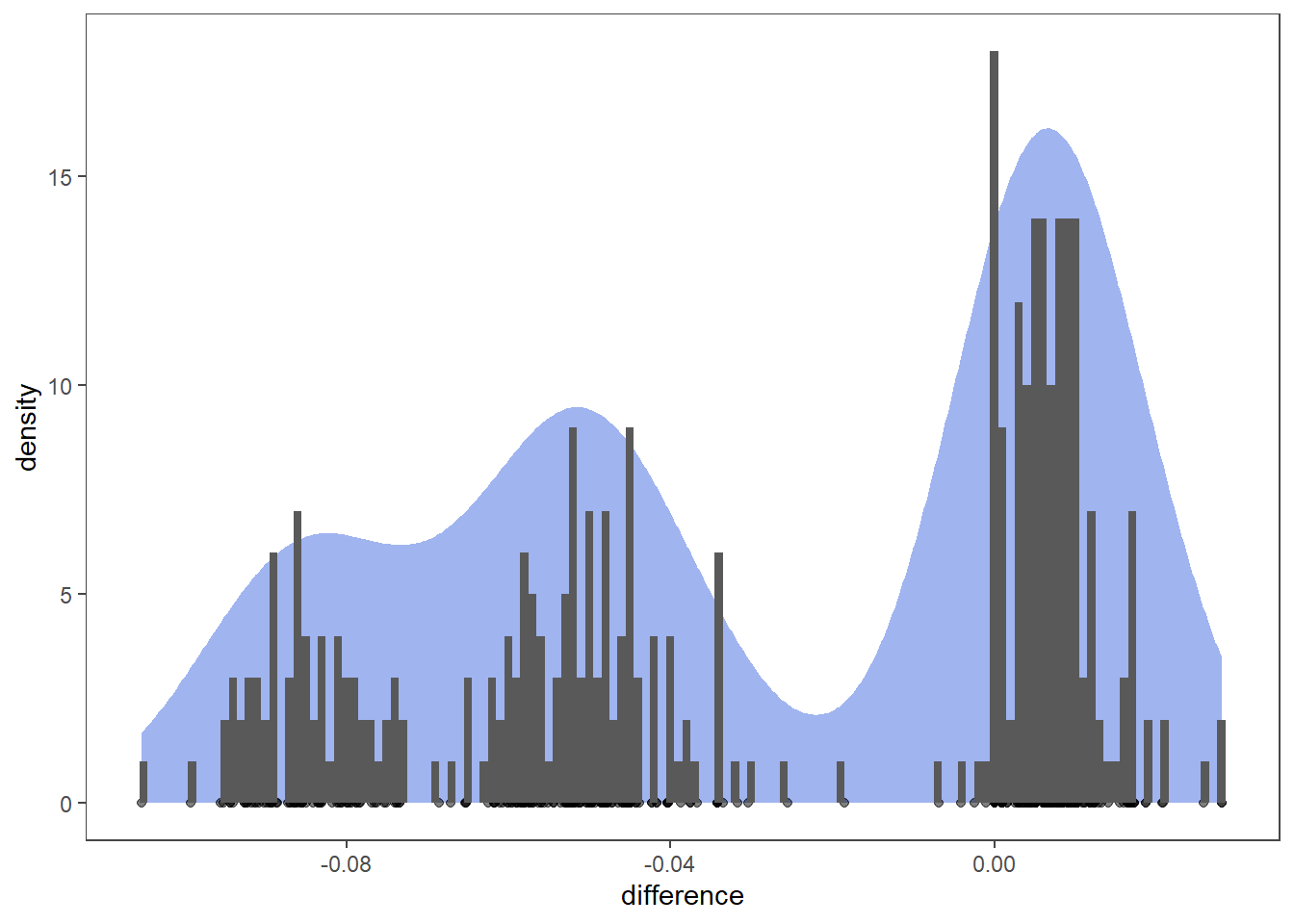

Differences between pairs of models with same parameters, differing only in leaf wetness estimation. We shall consult visual aids to assess if distribution of differences follows normal distribution.

# load the data

load( file = here::here("data", "op_2007_16", "auc_data.RData"))

t_data <-

auc_data %>%

group_by(rh_thresh, temp_thresh, hours) %>%

unite(var, rh_thresh, temp_thresh, hours) %>%

spread(key = lw_rh, value = auc) %>%

mutate(difference = rain - rainrh)

ggplot(t_data, aes(x = difference)) +

geom_density(fill = "royalblue",

alpha = 0.5,

color = NA) +

geom_point(aes(y = 0),

alpha = 0.5) +

geom_histogram(binwidth = 0.001) +

theme_article()

Compute summary statistics by groups.

group_by(auc_data, lw_rh) %>%

summarise(

count = n(),

median = median(auc, na.rm = TRUE),

IQR = IQR(auc, na.rm = TRUE)

) %>%

kable(format = "html") %>%

kableExtra::kable_styling( latex_options = "striped",full_width = FALSE)| lw_rh | count | median | IQR |

|---|---|---|---|

| rain | 343 | 0.6953608 | 0.0601804 |

| rainrh | 343 | 0.7345361 | 0.0902706 |

shapiro.test(t_data$difference)##

## Shapiro-Wilk normality test

##

## data: t_data$difference

## W = 0.8718, p-value = 2.877e-16The p-value < 0.05 implying that the distribution of the data is significantly different from normal distribution.

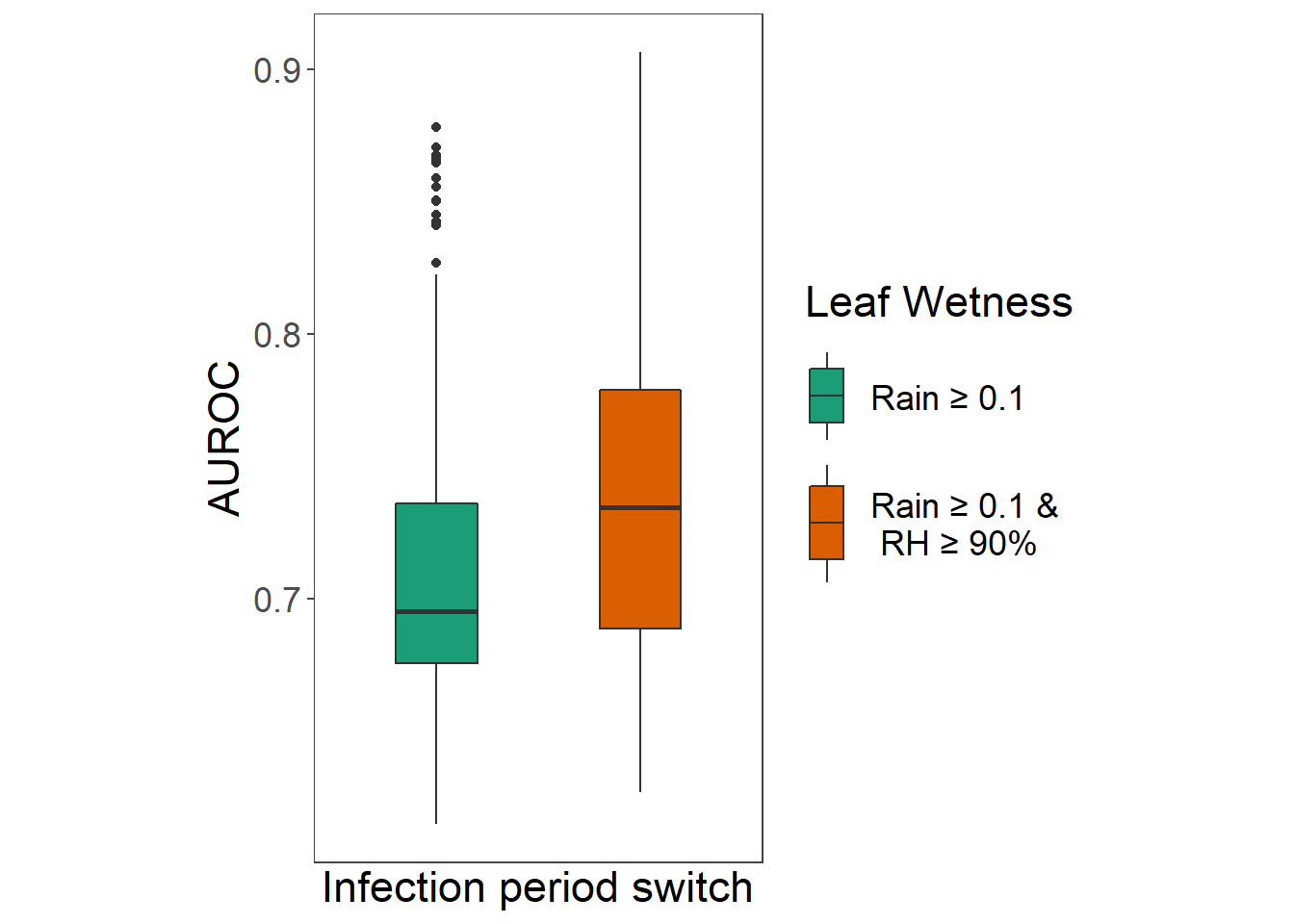

Use paired-sample Wilcoxon test to determine if median AUROC with rain as LW estimator is as good as the median auc with both rain and RH as estimators.

w.test <- with(t_data,

wilcox.test(

rain,

rainrh,

paired = TRUE,

exact = F,

alternative = "less"

))

w.test##

## Wilcoxon signed rank test with continuity correction

##

## data: rain and rainrh

## V = 11211, p-value < 2.2e-16

## alternative hypothesis: true location shift is less than 0Wilcoxon signed rank test showed that median AUROC is greater when using both rain >0.1 mm and RH>90% instead only rain >0.1 mm and as leaf wetness indicators with p < 0.001. Model outputs with rain as single predictor are removed from further analysis.

auc_data %>%

mutate(Leaf_Wetness = factor(ifelse(

lw_rh == "rainrh", "rain and rh", "only rain"

))) %>%

ggplot(aes(

x = factor(lw_rh),

y = auc,

group = Leaf_Wetness

)) +

geom_boxplot(aes(fill = Leaf_Wetness),

width = 0.4) +

scale_fill_brewer(

palette = "Dark2",

name = "Leaf Wetness",

labels = c(paste0("\nRain ","\u2265"," 0.1\n"), paste0("\nRain ","\u2265"," 0.1 &\n RH ","\u2265"," 90%\n"))

) +

scale_x_discrete(breaks = c(0, 90),

labels = c("rain", "rh and rain")) +

xlab("Infection period switch") +

ylab("AUROC") +

theme_article() +

theme(text = element_text(size=17))+

coord_equal(13 / 1)

auc_data <-

auc_data %>%

filter(lw_rh == "rainrh") %>%

select(-lw_rh)T, RH and sporulation duration

Initial exploration

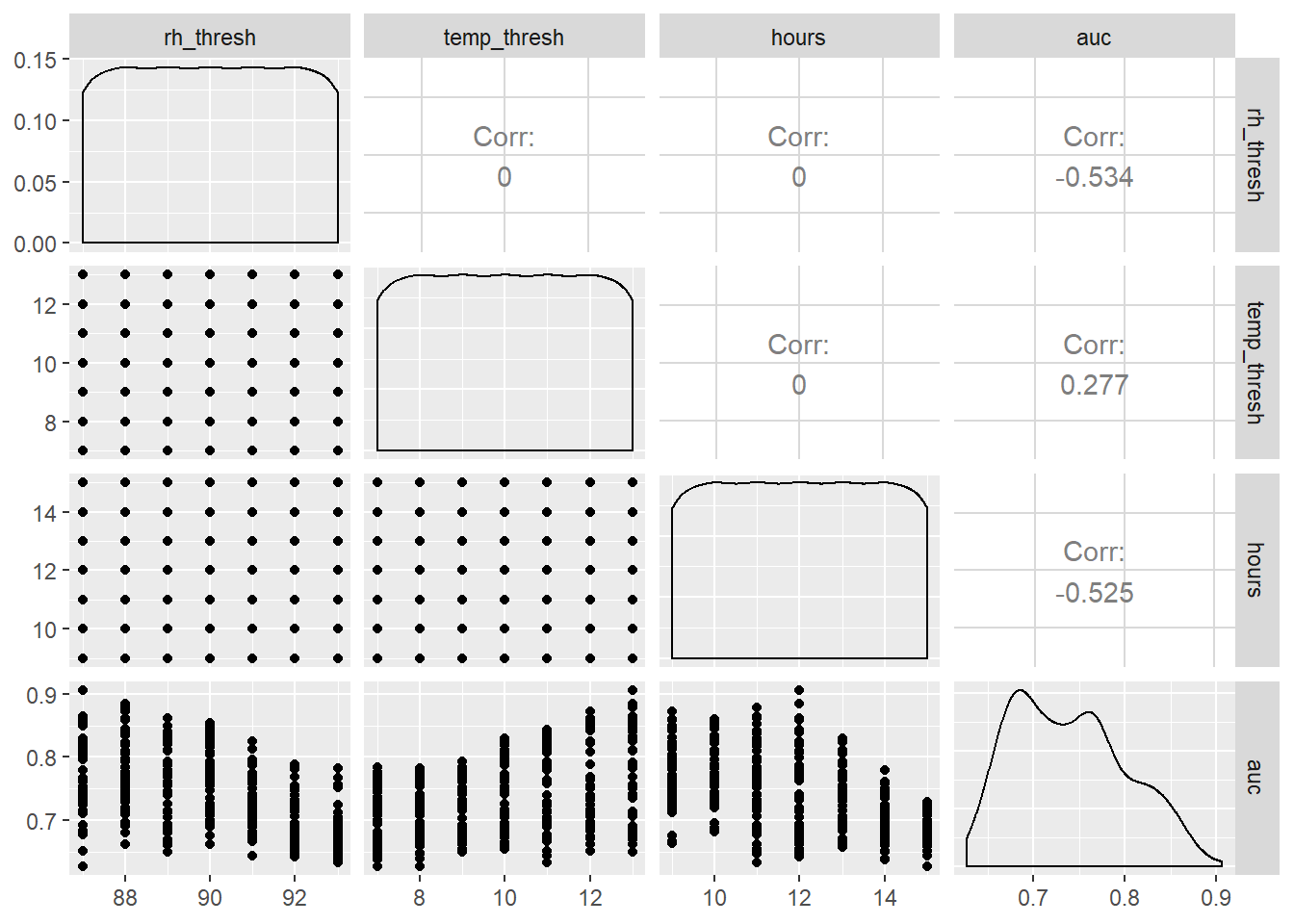

Scatter plot matrix shows some relationships between y and other variables.

GGally::ggpairs(auc_data,

lower = list(continuous = "points"),

upper = list(continuous = "cor"))

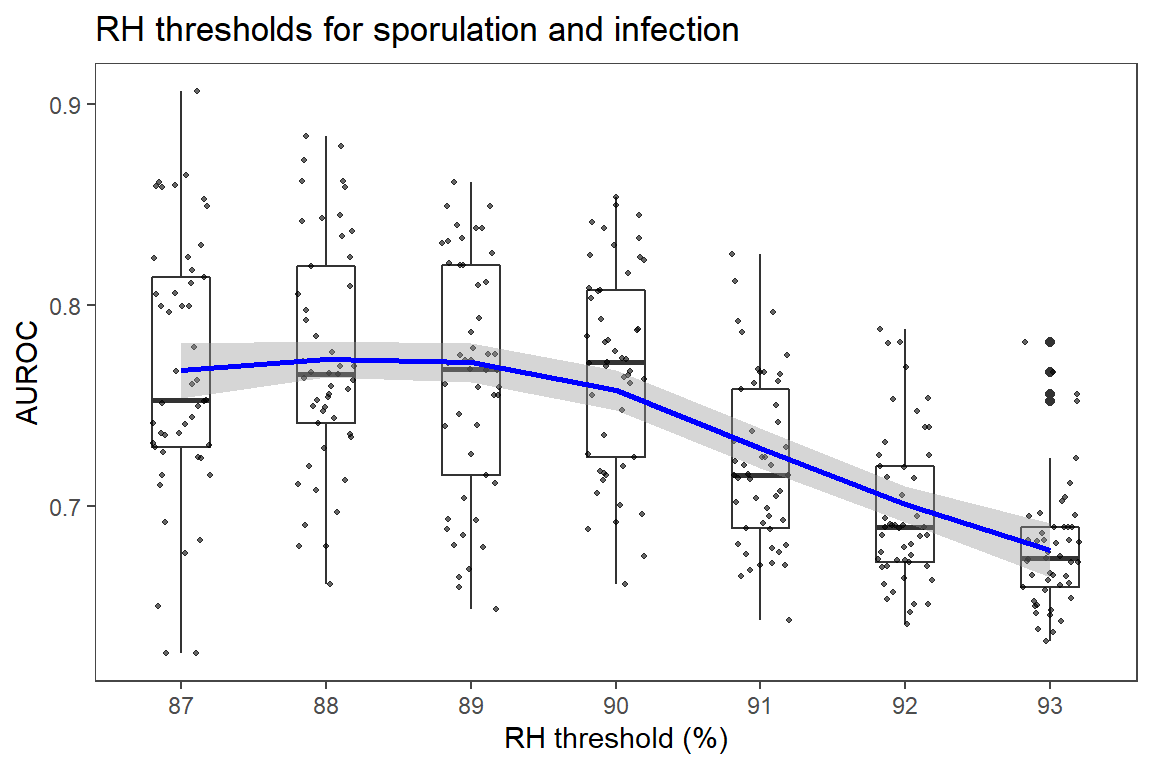

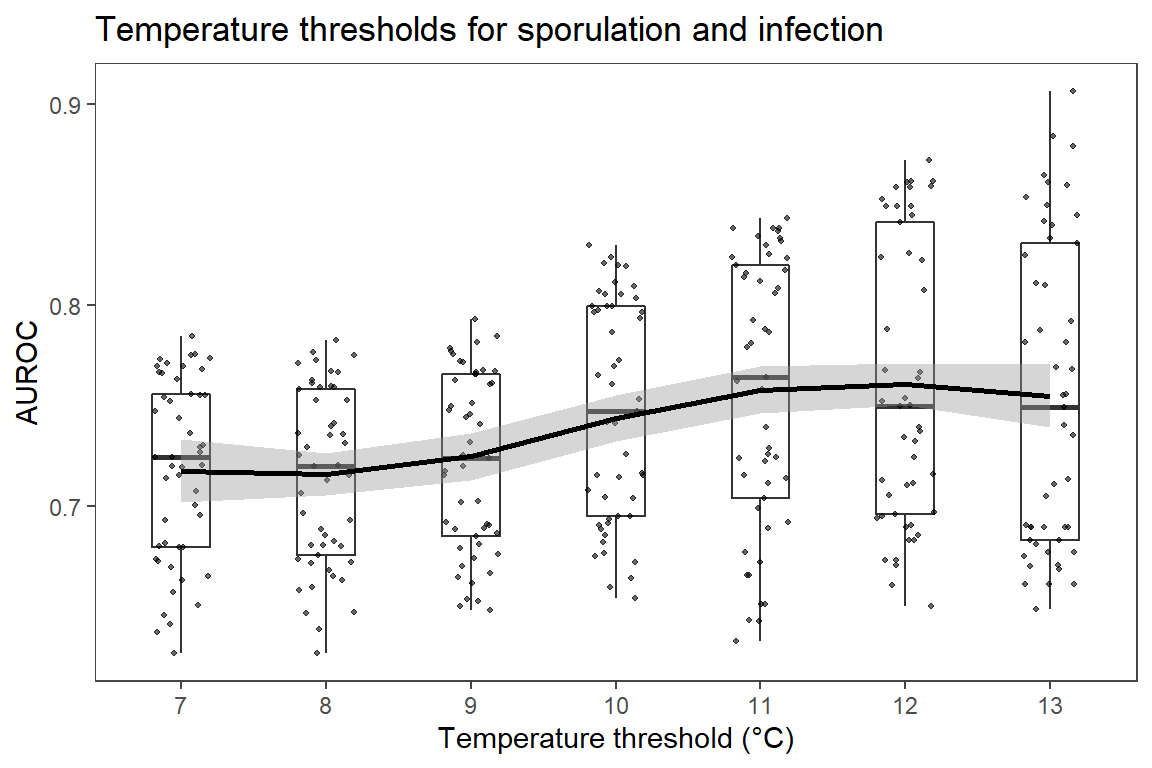

Increasing temperature threshold had positive, while reducing RH and duration of sporulation threshold had negative correlation with AUROC.

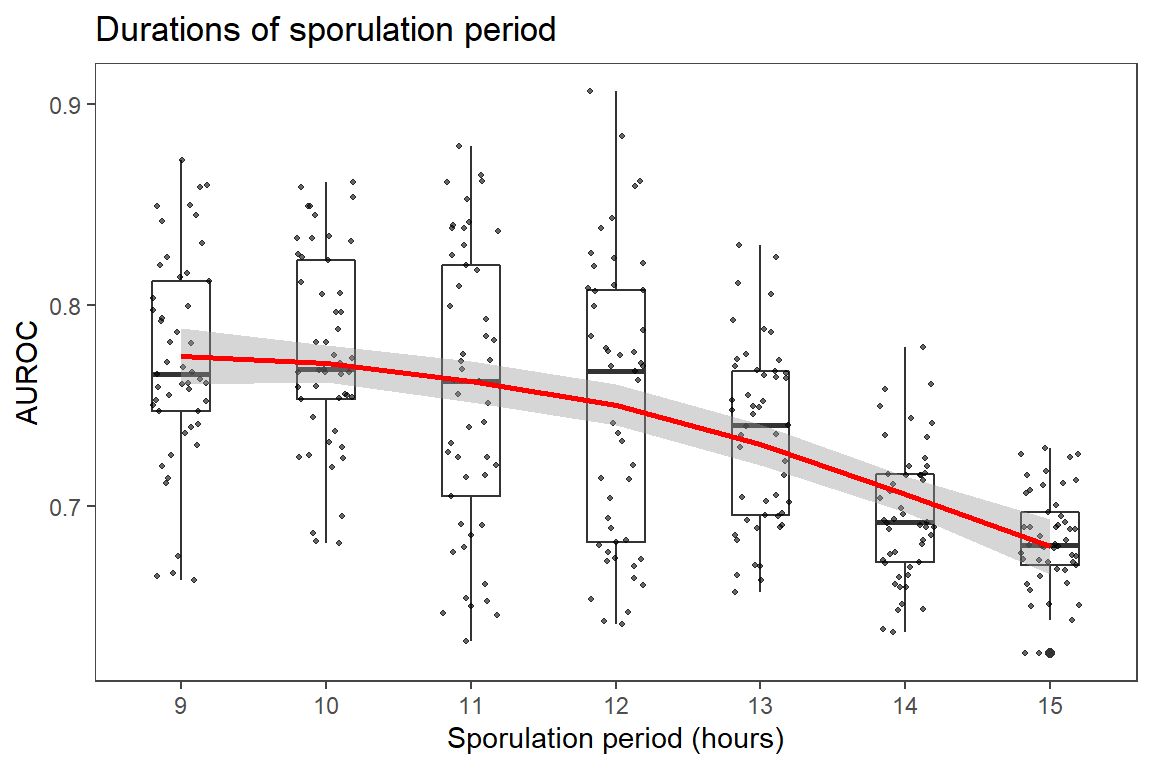

Take initial look at descriptive statistics and trend of AUROC response as a factor of each variable investigated.

p1 <-

auc_data[order(auc_data$hours), ] %>%

ggplot(., aes(factor(hours), auc)) +

geom_boxplot(width = 0.4) +

geom_jitter(

position = position_jitter(width = 0.2),

colour = "black",

alpha = 0.6,

size = 0.7

) +

ggtitle("Durations of sporulation period") +

geom_smooth(method = "loess",

se = T,

color = "red",

aes(group = 1)) +

xlab("Sporulation period (hours)") +

ylab("AUROC") +

theme_article()

p2 <-

ggplot(auc_data, aes(factor(rh_thresh), auc)) +

geom_boxplot(width = 0.4) +

geom_jitter(

position = position_jitter(width = 0.2),

colour = "black",

alpha = 0.6,

size = 0.7

) +

ggtitle("RH thresholds for sporulation and infection") +

geom_smooth(method = "loess",

se = T,

color = "blue",

aes(group = 1)) +

ylab("AUROC") +

xlab("RH threshold (%)") +

theme_article()

p3 <-

ggplot(auc_data, aes(factor(temp_thresh), auc)) +

geom_boxplot(width = 0.4) +

geom_jitter(

position = position_jitter(width = 0.2),

colour = "black",

alpha = 0.6,

size = 0.7

) +

ggtitle("Temperature thresholds for sporulation and infection") +

geom_smooth(

method = "loess",

se = T,

color = "black",

aes(group = 1),

show.legend = T

) +

xlab("Temperature threshold (°C)") +

ylab("AUROC") +

theme_article()

grDevices::png(filename = here::here("images", "init_vis.png"),

width = 400, height = 800,bg = "white", res = NA)

egg::ggarrange(p1,p2,p3, ncol = 1)

dev.off()## png

## 2p1;p2;p3

Model Fitting

lapply(auc_data[, 1:3], function(x)

sort(unique(x)))## $rh_thresh

## [1] 87 88 89 90 91 92 93

##

## $temp_thresh

## [1] 7 8 9 10 11 12 13

##

## $hours

## [1] 9 10 11 12 13 14 15Create coded data set. Seven levels of each variable are used.

cd_data <- coded.data(auc_data,

Tt ~ (temp_thresh - 10),

RHt ~ (rh_thresh - 90),

SDt ~ (hours - 12))

str(cd_data)## Classes 'coded.data' and 'data.frame': 343 obs. of 4 variables:

## $ RHt: num -3 -3 -3 -3 -3 -3 -3 -3 -3 -3 ...

## $ Tt : num 0 0 0 0 0 0 0 1 1 1 ...

## $ SDt: num -2 -1 0 1 2 3 -3 -2 -1 0 ...

## $ auc: num 0.797 0.8 0.8 0.805 0.761 ...

## - attr(*, "codings")=List of 3

## ..$ Tt :Class 'formula' language Tt ~ (temp_thresh - 10)

## .. .. ..- attr(*, ".Environment")=<environment: R_GlobalEnv>

## ..$ RHt:Class 'formula' language RHt ~ (rh_thresh - 90)

## .. .. ..- attr(*, ".Environment")=<environment: R_GlobalEnv>

## ..$ SDt:Class 'formula' language SDt ~ (hours - 12)

## .. .. ..- attr(*, ".Environment")=<environment: R_GlobalEnv>

## - attr(*, "rsdes")=List of 2

## ..$ primary: chr "Tt" "RHt" "SDt"

## ..$ call : language coded.data(data = auc_data, Tt ~ (temp_thresh - 10), RHt ~ (rh_thresh - 90), SDt ~ (hours - 12))head(cd_data)We may use non-parametric local regression (LOESS) to obtain predicted values for the 4-dimensional response surface, using RHt, SDt, and Tt, and all 3- and 2-way iterations as the predictors. We can then compare the extent of agreement between polynomial regressions and the loess regression to aid in choosing the degree of the polynomial regression, by using the concordance correlation coefficient (see Lin, 1989, (A concordance correlation coefficient to evaluate reproducibility, Biometrics 45:255–268).

lo_fit1 <- loess(auc ~ RHt * SDt * Tt, data = cd_data)

lo_pred <- predict(lo_fit1)Fit models of first to fourth order, and evaluate fits.

poly_1_fit <- lm(auc ~ poly(RHt, SDt, Tt, degree = 1), data = cd_data)

poly_2_fit <- lm(auc ~ poly(RHt, SDt, Tt, degree = 2), data = cd_data)

poly_3_fit <- lm(auc ~ poly(RHt, SDt, Tt, degree = 3), data = cd_data)

poly_4_fit <- lm(auc ~ poly(RHt, SDt, Tt, degree = 4), data = cd_data)

f_stat <-

sapply(list(poly_1_fit, poly_2_fit,poly_3_fit, poly_4_fit), function(x) summary(x)$fstatistic[1] %>% as.numeric %>% round(2))

rcompanion::compareLM(poly_1_fit,poly_2_fit,poly_3_fit,poly_4_fit)[[2]] %>%

add_column( Order = 1:4, .before = 1) %>%

add_column(., F_statistic = f_stat, .before = "p.value") %>%

rename("No. of Parameters" = Rank,

"DF" = "Df.res",

"R sq." = "R.squared",

"Adj. R sq." = "Adj.R.sq",

"F" = "F_statistic",

"p" = "p.value",

"Shapiro-Wilk" = "Shapiro.W",

"Shapiro-Wilk p" = "Shapiro.p") %>%

select(-c("AIC", "AICc", "BIC")) %>%

kable(format = "html") %>%

kableExtra::kable_styling( latex_options = "striped",full_width = FALSE) | Order | No. of Parameters | DF | R sq. | Adj. R sq. | F | p | Shapiro-Wilk | Shapiro-Wilk p |

|---|---|---|---|---|---|---|---|---|

| 1 | 4 | 339 | 0.6375 | 0.6342 | 198.68 | 0 | 0.9934 | 0.13760 |

| 2 | 10 | 333 | 0.7581 | 0.7516 | 115.98 | 0 | 0.9971 | 0.81390 |

| 3 | 20 | 323 | 0.8617 | 0.8535 | 105.89 | 0 | 0.9963 | 0.61070 |

| 4 | 35 | 308 | 0.8811 | 0.8680 | 67.12 | 0 | 0.9908 | 0.03029 |

Cubic model seems to be the best fit for our purpose. We want to get the best possible fit to the data, to understand underlying relationships between variable thresholds of our model. Hence, our priority is increase in explanatory power, for which our main guide is adjusted R2, while the distribution of residuals is still fulfilling the assumption of normality. Model of 4th order seems to provide very little gain in terms of fit Adj. R2 comparing to 3rd order, and most importantly is not over-fitting indicated by Wilks-Shapiro test for model of 4th order. Information criterion are indication high values for all fits because of structure of data set, and this information is disregarded because the model is used for interpretation of the data.

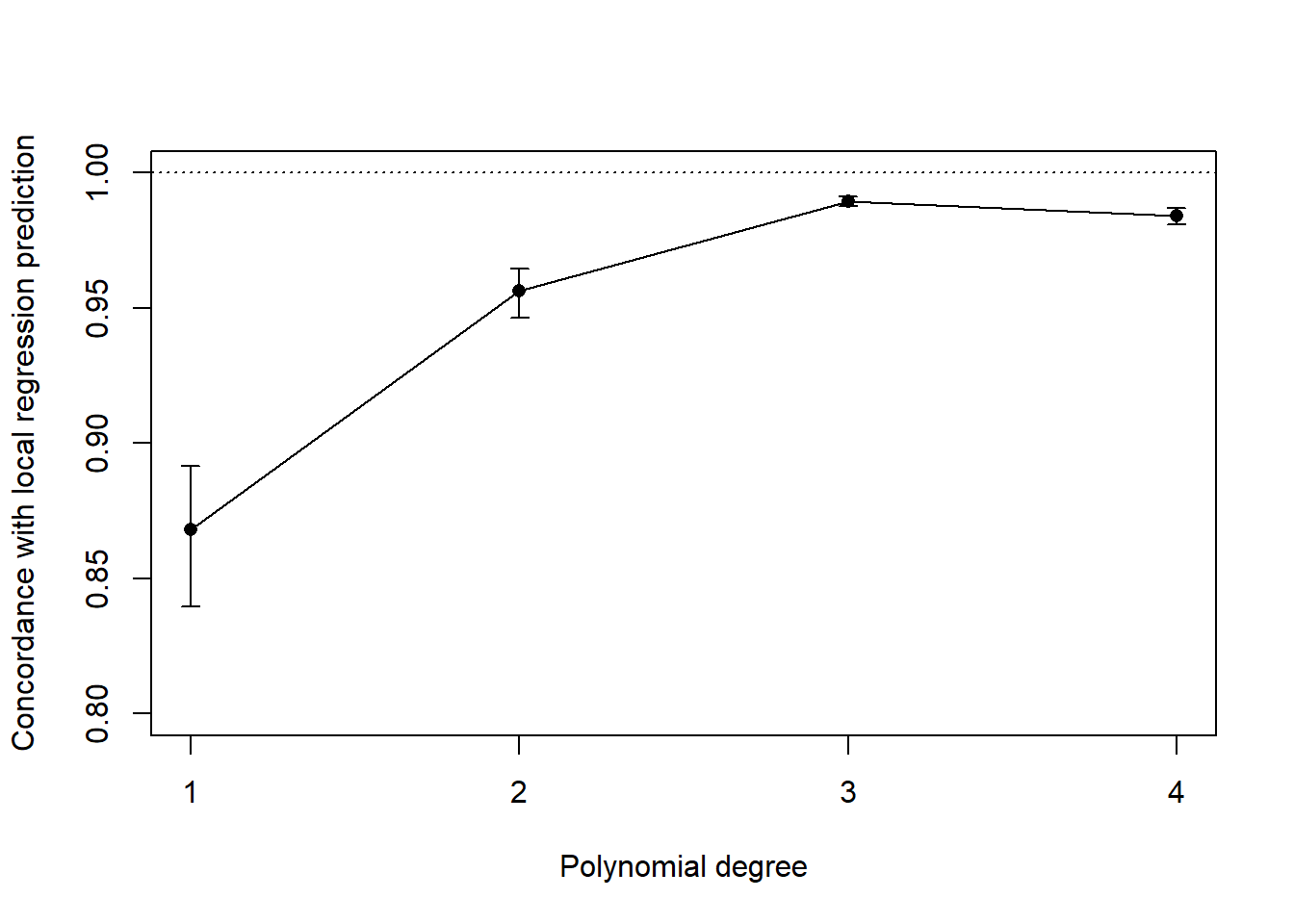

Looking at the concordance between local regression (LOESS) and polynomial models, we have:

pred_cd_data <-

data.frame(

lo_pred,

predict(poly_1_fit),

predict(poly_2_fit),

predict(poly_3_fit),

predict(poly_4_fit)

)

c1 <-

CCC(pred_cd_data$lo_pred, pred_cd_data$predict.poly_1_fit.)$rho

c2 <-

CCC(pred_cd_data$lo_pred, pred_cd_data$predict.poly_2_fit.)$rho

c3 <-

CCC(pred_cd_data$lo_pred, pred_cd_data$predict.poly_3_fit.)$rho

c4 <-

CCC(pred_cd_data$lo_pred, pred_cd_data$predict.poly_4_fit.)$rho

plot(

c(c1$est, c2$est, c3$est, c4$est),

ylim = c(.8, 1),

pch = 16,

type = "o",

ylab = "Concordance with local regression prediction",

xlab = "Polynomial degree",

xaxt = "n"

)

axis(1, 1:4, 1:4)

abline(h = 1, lty = 3)

arrows(

1:4,

c(c1$lwr.ci, c2$lwr.ci, c3$lwr.ci, c4$lwr.ci),

1:4,

c(c1$upr.ci, c2$upr.ci, c3$upr.ci, c4$upr.ci),

code = 3,

length = .05,

angle = 90

)

This reinforces the choice of the polynomial model of degree 3, which yields predictions that agree the most with the local, non-parametric regression, and hence adequately reproduces the behaviour of the response surface.

panderOptions('round', 2)

panderOptions('keep.trailing.zeros', TRUE)

pander(poly_3_fit, add.significance.stars = TRUE)| Estimate | Std. Error | t value | Pr(>|t|) | ||

|---|---|---|---|---|---|

| (Intercept) | 0.74 | 0.00 | 583.67 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)1.0.0 | -0.60 | 0.02 | -25.78 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)2.0.0 | -0.22 | 0.02 | -9.57 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)3.0.0 | 0.09 | 0.02 | 3.69 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)0.1.0 | -0.60 | 0.02 | -25.39 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)1.1.0 | 2.32 | 0.43 | 5.34 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)2.1.0 | 2.37 | 0.43 | 5.46 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)0.2.0 | -0.16 | 0.02 | -6.65 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)1.2.0 | 4.83 | 0.43 | 11.11 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)0.3.0 | 0.04 | 0.02 | 1.57 | 0.12 | |

| poly(RHt, SDt, Tt, degree = 3)0.0.1 | 0.31 | 0.02 | 13.39 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)1.0.1 | -2.92 | 0.43 | -6.73 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)2.0.1 | 2.38 | 0.43 | 5.47 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)0.1.1 | -3.60 | 0.43 | -8.29 | 0.00 | * * * |

| poly(RHt, SDt, Tt, degree = 3)1.1.1 | 21.18 | 8.05 | 2.63 | 0.01 | * * |

| poly(RHt, SDt, Tt, degree = 3)0.2.1 | 0.37 | 0.43 | 0.86 | 0.39 | |

| poly(RHt, SDt, Tt, degree = 3)0.0.2 | -0.04 | 0.02 | -1.87 | 0.06 | |

| poly(RHt, SDt, Tt, degree = 3)1.0.2 | 0.46 | 0.43 | 1.07 | 0.29 | |

| poly(RHt, SDt, Tt, degree = 3)0.1.2 | -0.83 | 0.43 | -1.92 | 0.06 | |

| poly(RHt, SDt, Tt, degree = 3)0.0.3 | -0.13 | 0.02 | -5.47 | 0.00 | * * * |

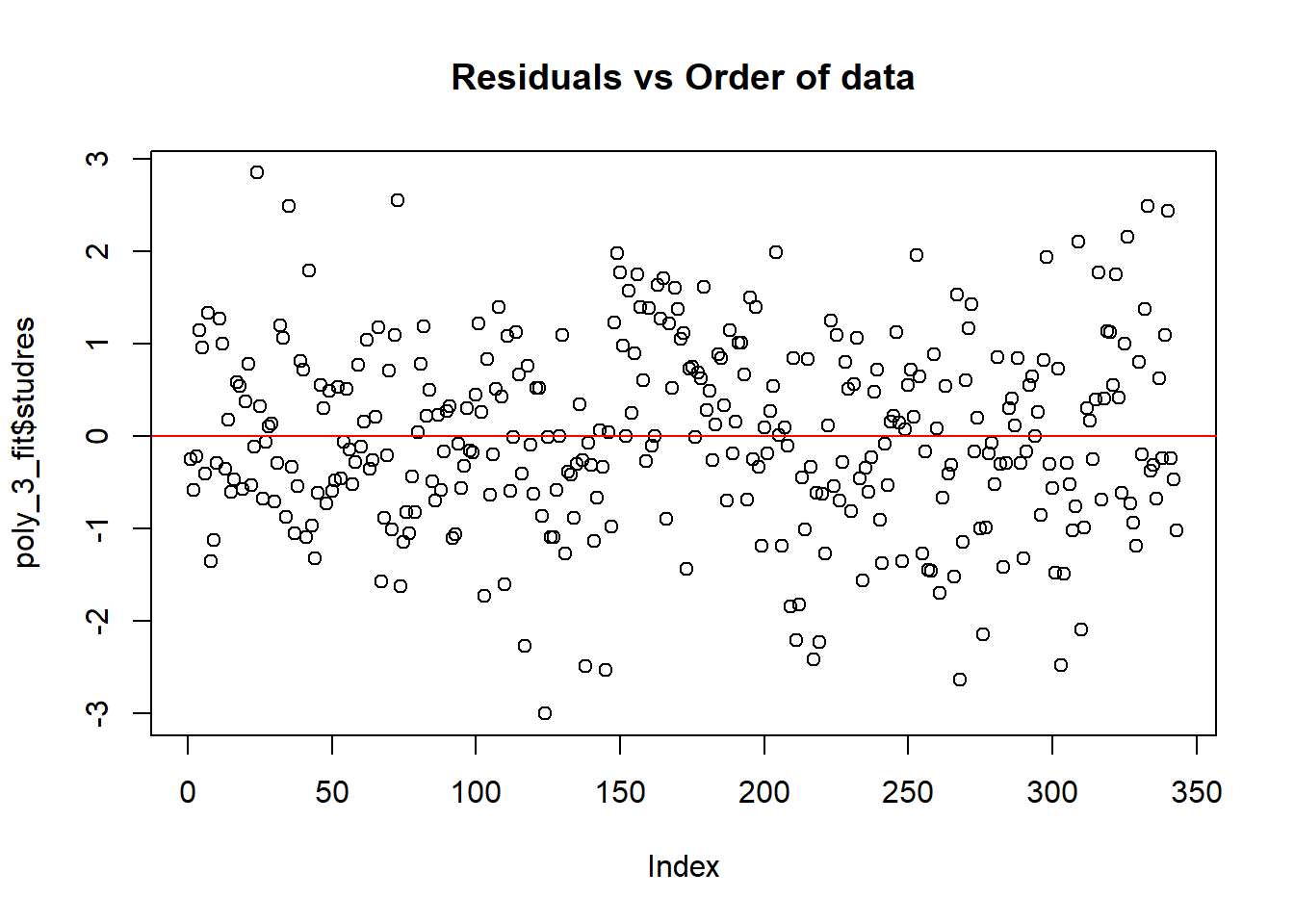

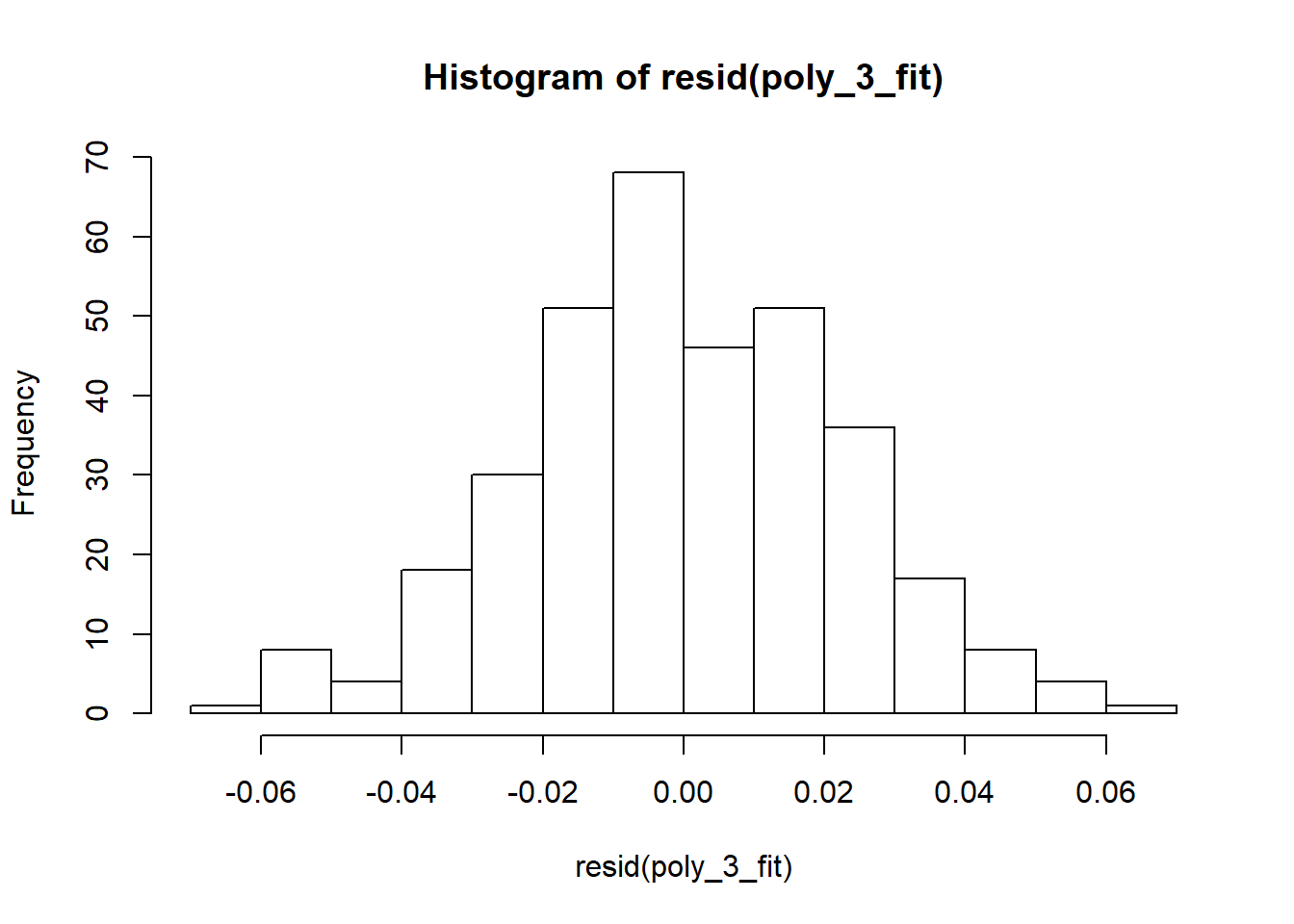

Evaluate model fit with diagnostic plot.

poly_3_fit$studres <- rstudent(poly_3_fit)

plot(poly_3_fit$studres, main = "Residuals vs Order of data")

abline(h = 0, col = "red")

hist(resid(poly_3_fit)) #distriburion of residuals should be approximately normal

Assumptions of normality of residuals are fulfilled.

Extract the model formula. The code has been borrowed from this StackOverflow thread

processPolyNames = function(coef) {

members = strsplit(mgsub::mgsub(coef, c("poly\\(", ", degre.*"), c("", "")), ", ")[[1]]

degree = as.numeric(strsplit(strsplit(coef, ")")[[1]][2], "\\.")[[1]])

coef_out = ""

for (d in seq_along(degree)) {

if (degree[d] == 0)

next

if (degree[d] == 1) {

if (coef_out == "") {

coef_out = members[d]

} else {

coef_out = paste0(coef_out, "*", members[d])

}

} else {

if (coef_out == "") {

coef_out = paste0(members[d], "^", degree[d], "^")

} else {

coef_out = paste0(coef_out, "*", members[d], "^", degree[d], "^")

}

}

}

return(coef_out)

}

coefs = summary(poly_3_fit)$coef[, 1]

prettyNames = lapply(names(coefs)[-1], processPolyNames)

prettyModel = ""

for (i in seq_along(coefs)) {

if (i == 1) {

prettyModel = paste0(prettyModel, round(coefs[i], 2))

} else {

prettyModel = paste0(prettyModel,

ifelse(coefs[i] >= 0, " + ", " "),

round(coefs[i], 2),

"*",

prettyNames[[i - 1]])

}

}

prettyModel <- paste("AUROC =", gsub("-", "- ", prettyModel))

cat(prettyModel)## AUROC = 0.74 - 0.6*RHt - 0.22*RHt^2^ + 0.09*RHt^3^ - 0.6*SDt + 2.32*RHt*SDt + 2.37*RHt^2^*SDt - 0.16*SDt^2^ + 4.83*RHt*SDt^2^ + 0.04*SDt^3^ + 0.31*Tt - 2.92*RHt*Tt + 2.38*RHt^2^*Tt - 3.6*SDt*Tt + 21.18*RHt*SDt*Tt + 0.37*SDt^2^*Tt - 0.04*Tt^2^ + 0.46*RHt*Tt^2^ - 0.83*SDt*Tt^2^ - 0.13*Tt^3^rm(processPolyNames, coefs, prettyNames)AUROC = 0.74 - 0.6RHt - 0.22RHt2 + 0.09RHt3 - 0.6SDt + 2.32RHtSDt + 2.37RHt2SDt - 0.16SDt2 + 4.83RHtSDt2 + 0.04SDt3 + 0.31Tt - 2.92RHtTt + 2.38RHt2Tt - 3.6SDtTt + 21.18RHtSDtTt + 0.37SDt2Tt - 0.04Tt2 + 0.46RHtTt2 - 0.83SDtTt2 - 0.13Tt3

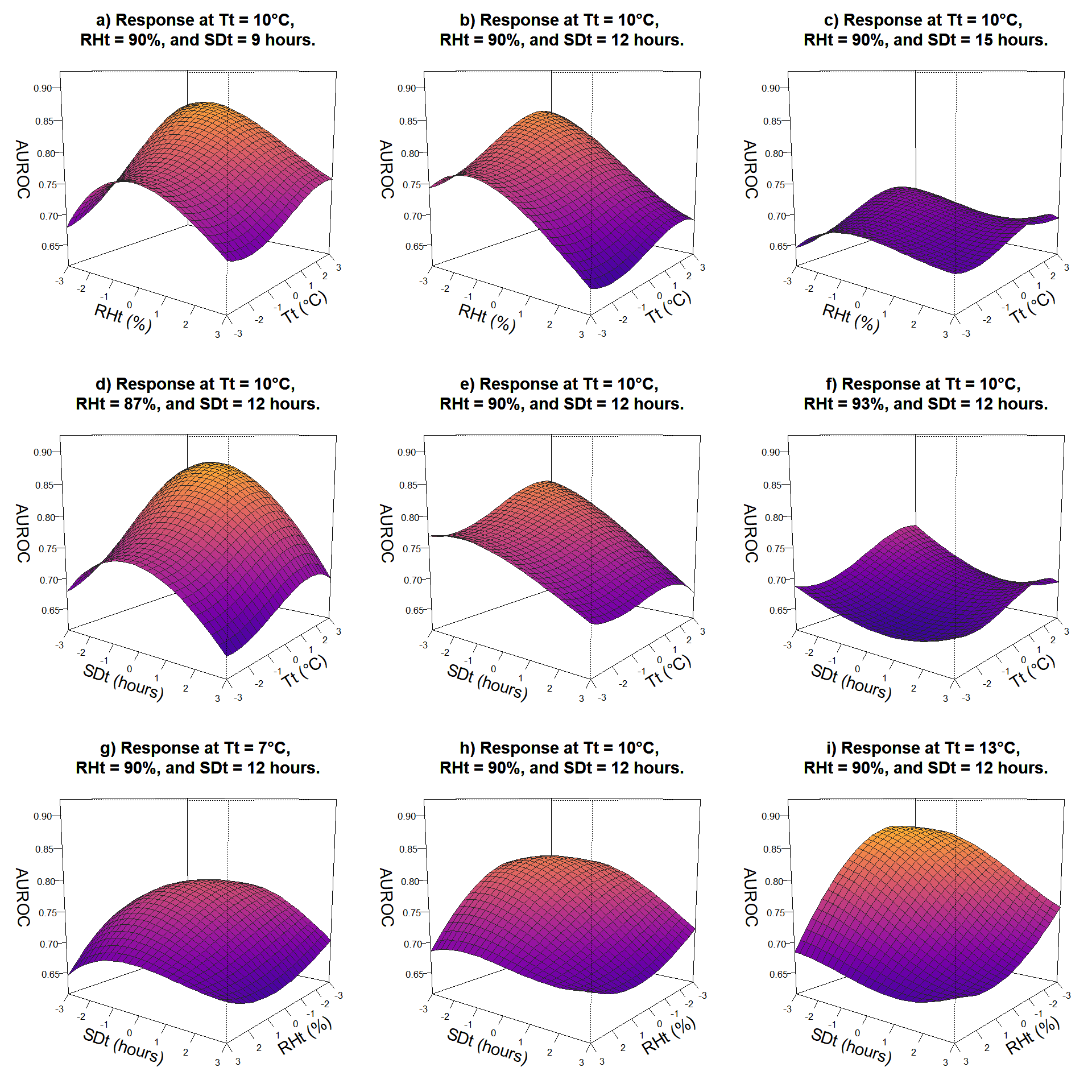

Surface Plot of Model Fits

Visualise fitted response surface.

z_min <- min(auc_data$auc) - c(min(auc_data$auc) * 0.02)

z_max <- max(auc_data$auc) + c(max(auc_data$auc) * 0.02)

# use a viridis palette for usability

library(viridis)

color_var <- plasma(256)

par(mar = c(1.5, 4.5, 3.5, 1.5),

mfrow = c(3, 3),

cex = 0.5)

par_cut <- data.frame(

Tt = c(rep(0, 6), -3, 0, +3),

RHt = c(rep(0, 3), -3, 0, +3, rep(0, 3)),

SDt = c(-3, 0, +3, rep(0, 6))

)

plot_ls <- list()

for (i in seq(nrow(par_cut))) {

persp(

poly_3_fit,

if (i <= 3) {

~ RHt + Tt

} else if (i %in% 4:6) {

~ SDt + Tt

} else if (i >= 7) {

~ SDt + RHt

}

,

at = data.frame(par_cut[i, ]),

zlab = "\nAUROC",

xlab = if (i <= 3) {

c("RHt (%)", "Tt (°C)")

} else if (i %in% 4:6) {

c("SDt (hours)", "Tt (°C)")

} else if (i >= 7) {

c("SDt (hours)", "RHt (%)")

},

col = color_var,

zlim = c(z_min, z_max),

theta = if(i %in% c(1:6)){37}else{ 127},

phi = 10,

border = "grey15",

lwd = 0.5,

cex.main = 1.8,

cex.axis = 1.06,

cex.lab = 1.8,

main = paste0("\n",letters[i],") ", "Response at ",

"Tt = ", par_cut[i,1] + 10,"°C, ",

"\n",

"RHt = ", par_cut[i,2] + 90, "%, ",

"and SDt = ", par_cut[i,3] +12, " hours."

)

)

}

Plots show relationship between variation in parameters of two variables with third variable fixed at three levels and response as AUROC. 3D surface plots indicate that reduced sporulation duration and relative humidity threshold improves accuracy of the model; while model versions with increased temperature threshold have better diagnostic performance.

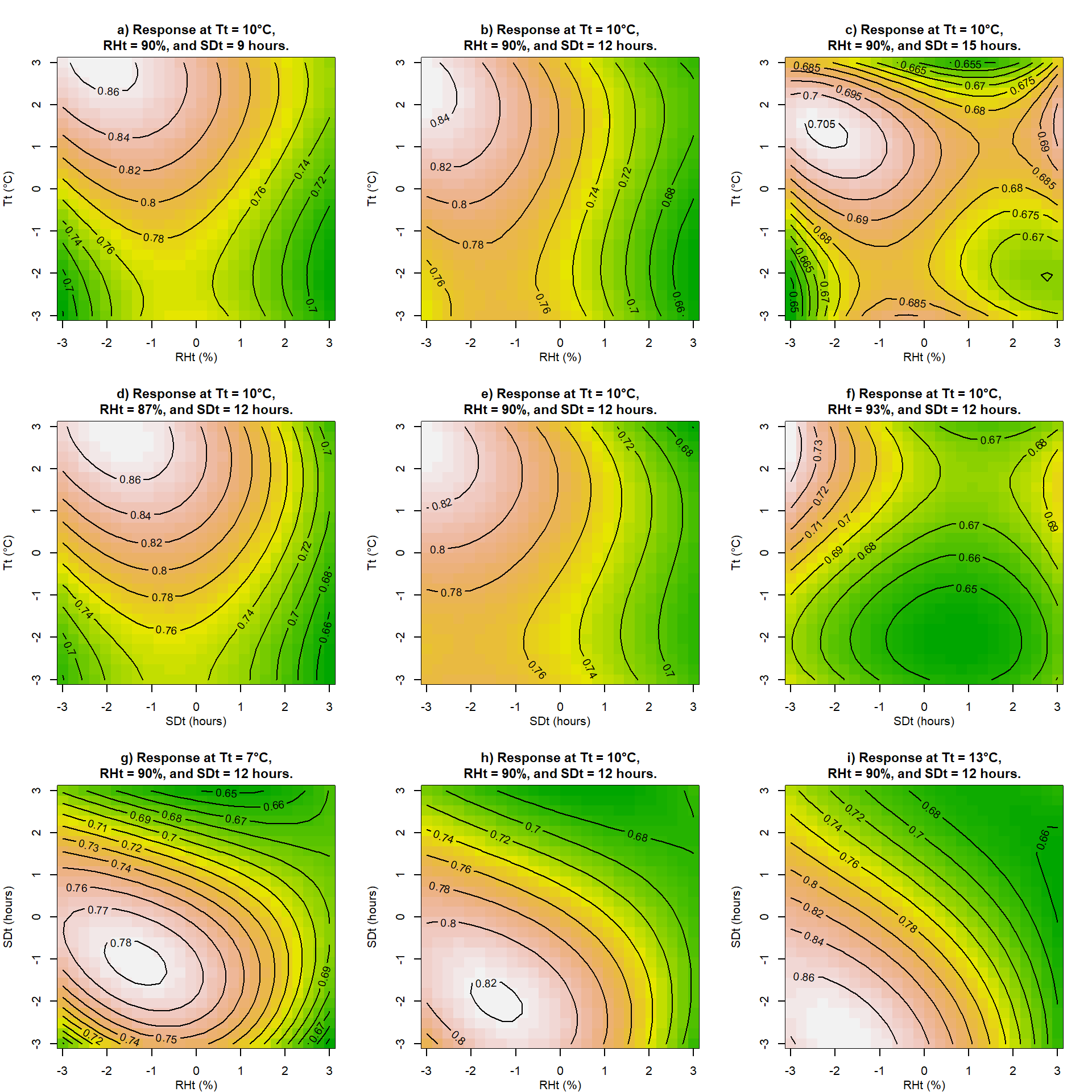

Another way to present this data is with contour plots. We will plot contour plots corresponding to the above 3D surfaces.

par(

mar = c(3, 4, 4, 2),

mfrow = c(3, 3),

cex.main = 1.2,

cex.axis = 1.06,

cex.lab = 1.1)

# c(5, 4, 4, 2)

for (i in seq(nrow(par_cut))) {

contour(poly_3_fit,

if (i <= 3) {

~ RHt + Tt

} else if (i %in% 4:6) {

~ SDt + Tt

} else if (i >= 7) {

~ SDt + RHt

}

,

image=TRUE,

at = data.frame(par_cut[i,]),

zlab = "\nAUROC",

xlab = if (i <= 3) {

c("RHt (%)","Tt (°C)")

} else if (i %in% 4:6) {

c("SDt (hours)", "Tt (°C)")

} else if (i >= 7) {

c("SDt (hours)", "RHt (%)")

},

cex.main = 1.1,

cex.axis = 1,

cex.lab = 1,

main = paste0("\n",letters[i],") ", "Response at ",

"Tt = ", par_cut[i,1] + 10,"°C,",

"\n",

"RHt = ", par_cut[i,2] + 90, "%, ",

"and SDt = ", par_cut[i,3] +12, " hours."

)

)

}

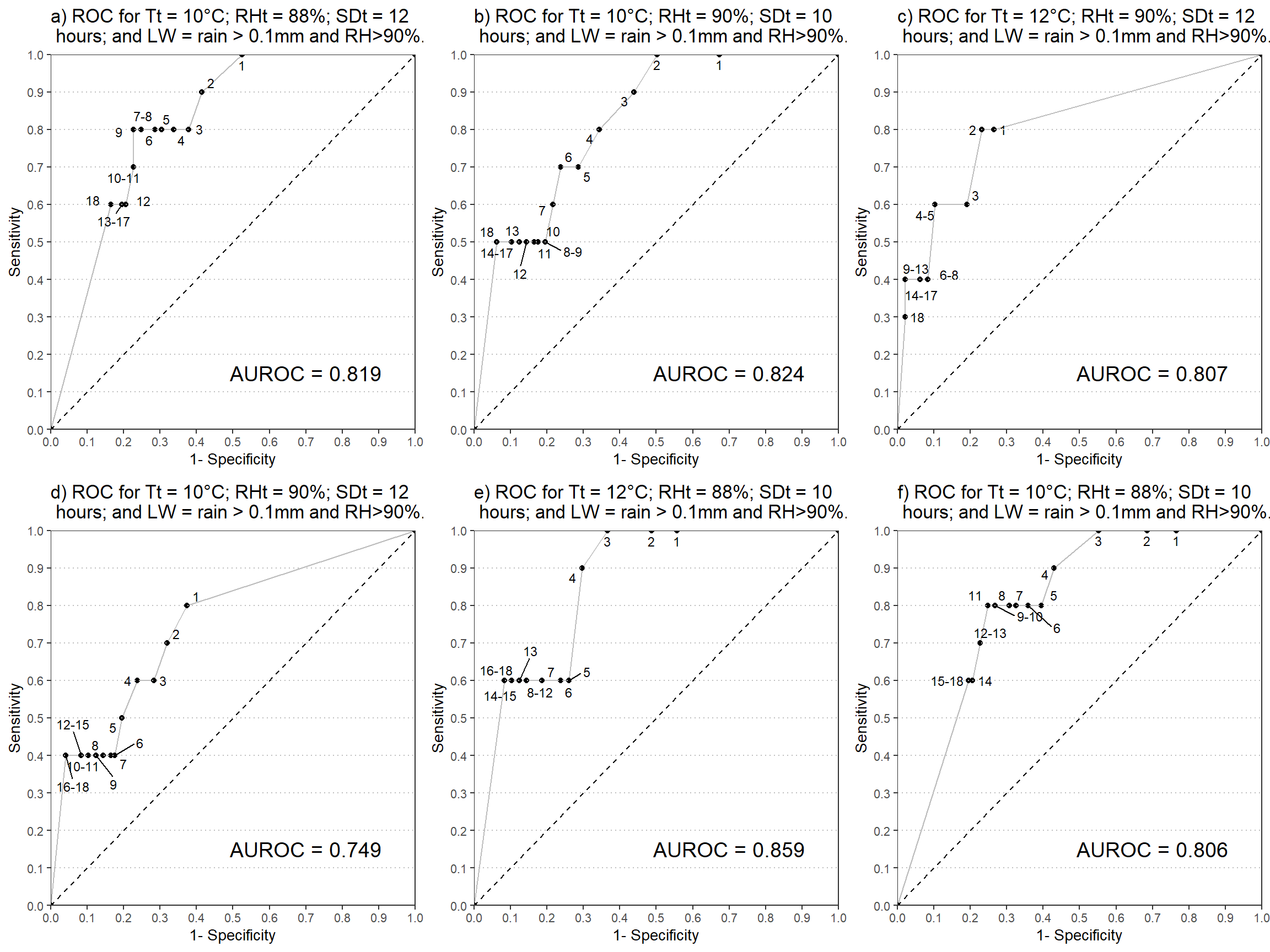

Further Investigation

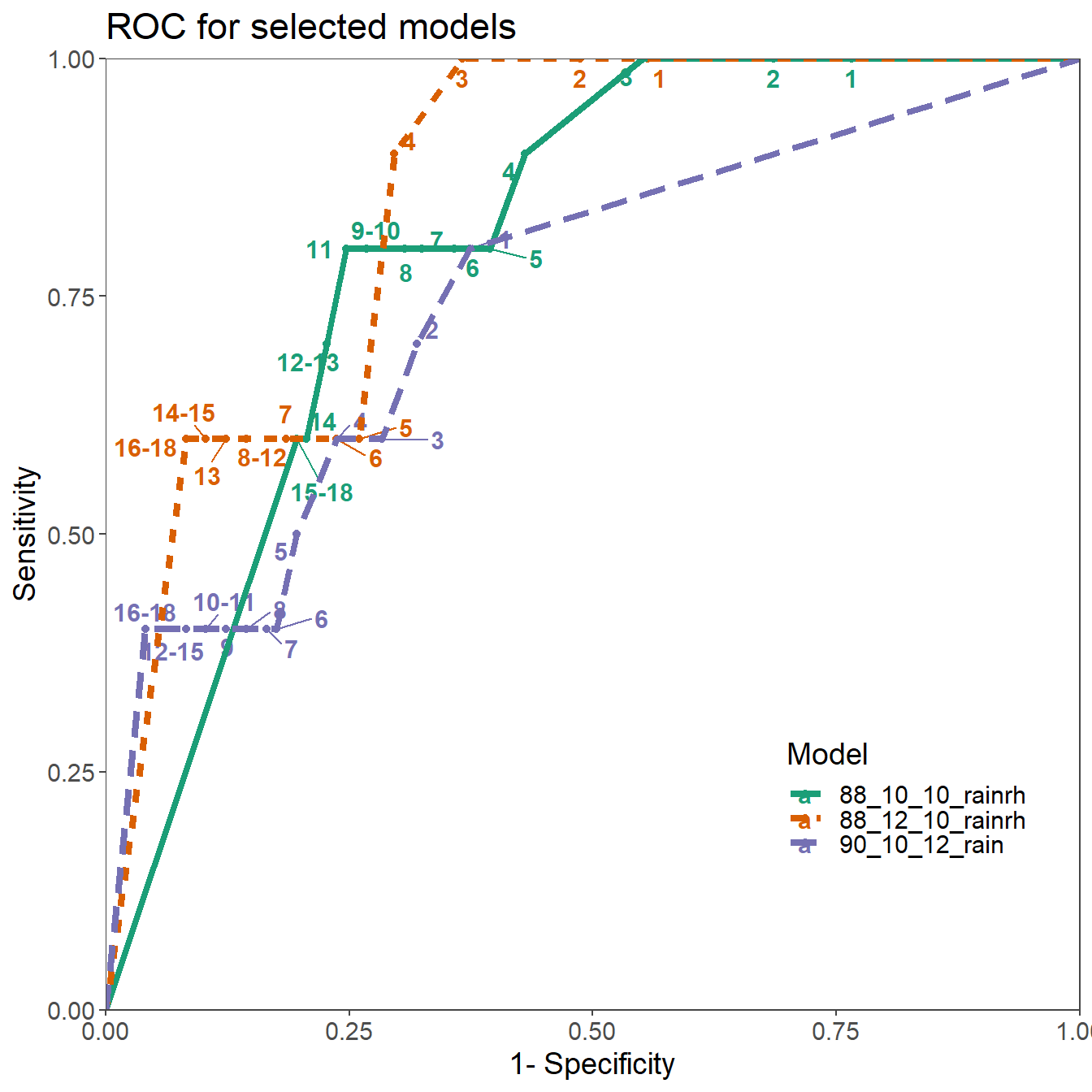

Based on the sensitivity analysis following model parameterisations were compared to the original parameters of Irish Rules and further analysed:

#Load function and the data

load(file = here::here("data", "op_2007_16", "ROC_data.RData"))

load(file = here::here("data", "op_2007_16", "AUROC_data.RData"))

load(file = here::here("data", "op_2007_16", "PlotROC.RData"))

mod_list <- list(ROC_data[["88_10_12_rainrh"]],

ROC_data[["90_10_10_rainrh"]],

ROC_data[["90_12_12_rainrh"]],

ROC_data[["90_10_12_rain"]],

ROC_data[["88_12_10_rainrh"]],

ROC_data[["88_10_10_rainrh"]])

pl <- map2(mod_list, c(1:length(mod_list)), PlotROC)

plot_grid(pl[[1]],

pl[[2]],

pl[[3]],

pl[[4]],

pl[[5]],

pl[[6]],

ncol = 3)

ls_roc <- lapply(mod_list[4:6], function(x) {

df <- x[rev(x$cut_point), ]

#append rows for plotting

x <- rep(NA, ncol(df))

df <- rbind(x, df)

df[nrow(df) + 1, ] <- NA

df$model <- unique(df$model[!is.na(df$model)])

df[1, c("sens", "one_min_spec")] <- 0

df[nrow(df), c("sens", "one_min_spec")] <- 1

#Condense labels for a single cutoff point

df <-

df %>%

group_by(one_min_spec, sens, model) %>%

summarise(cut_point = ifelse(

all(is.na(cut_point)),

"",

range(cut_point, na.rm = TRUE) %>%

unique() %>%

paste(collapse = "-")

)) %>%

ungroup()

return(df)

})

df <- bind_rows(ls_roc)

rename(df, Model = model) %>%

ggplot(aes(

one_min_spec,

sens,

group = Model,

color = Model,

label = cut_point

)) +

geom_point() +

geom_text_repel(size = 4,

fontface = "bold") +

geom_line(size = 1.5,

aes(lty = Model)) +

scale_colour_brewer(palette = "Dark2") +

scale_y_continuous(limits = c(0, 1),

expand = c(0, 0),

name = "Sensitivity") +

scale_x_continuous(limits = c(0, 1),

expand = c(0, 0),

name = "1- Specificity") +

theme_article() +

theme(

text = element_text(size = 14),

legend.position = c(.95, .15),

legend.justification = c("right", "bottom"),

) +

ggtitle("ROC for selected models")

IR have failed to indicate any risk in 2 and the warning threshold of 12 hour was reached in only 4 years out of 10.