Libraries

Packages needed for the analysis are loaded. If the libraries do not exist locally, they will be downloaded.

list.of.packages <-

c(

"tidyverse",

"readxl",

"data.table",

"lubridate",

"knitr",

"padr",

"readr",

"devtools",

"pracma",

"remotes",

"parallel",

"pbapply",

"ggrepel",

"ggthemes",

"egg",

"R.utils",

"rcompanion",

"mgsub",

"here",

"stringr",

"kableExtra",

"pander"

)

new.packages <-

list.of.packages[!(list.of.packages %in% installed.packages()[, "Package"])]

#Download packages that are not already present in the library

if (length(new.packages))

install.packages(new.packages, force = TRUE, dependencies = TRUE)

packages_load <-

lapply(list.of.packages, require, character.only = TRUE)

#Print warning if there is a problem with installing/loading some of packages

if (any(as.numeric(packages_load) == 0)) {

warning(paste("Package/s: ", paste(list.of.packages[packages_load != TRUE], sep = ", "), "not loaded!"))

} else {

print("All packages were successfully loaded.")

}## [1] "All packages were successfully loaded."rm(list.of.packages, new.packages, packages_load)The Rationale

The crop risk prediction model is useful only if it provides same level of protection as the standard practice, while reducing necessary costs and labour. It was evident that the Irish Rules with their default variable thresholds were to conservative and failed to prove as sustainable solution for the disease forecasting.

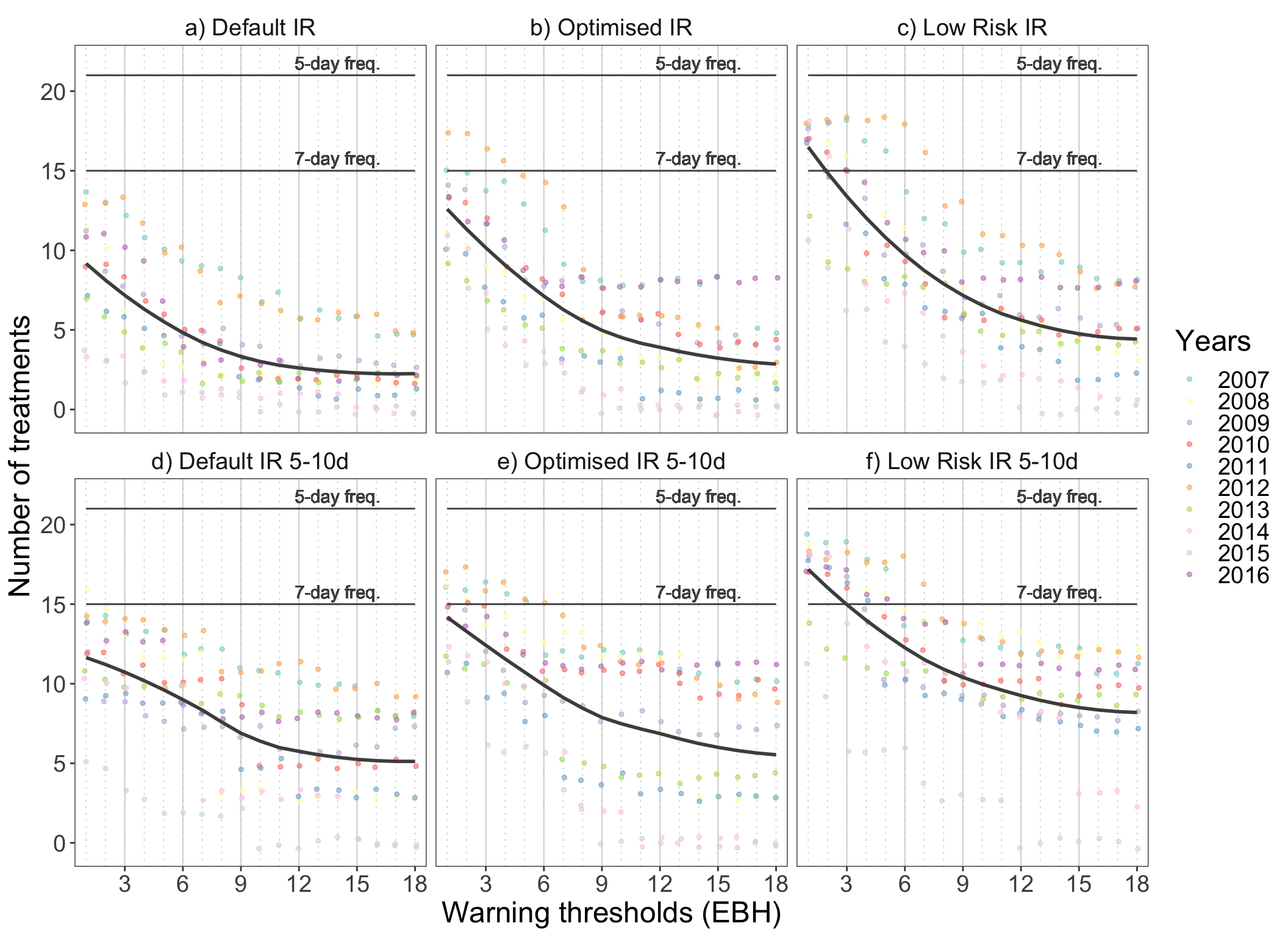

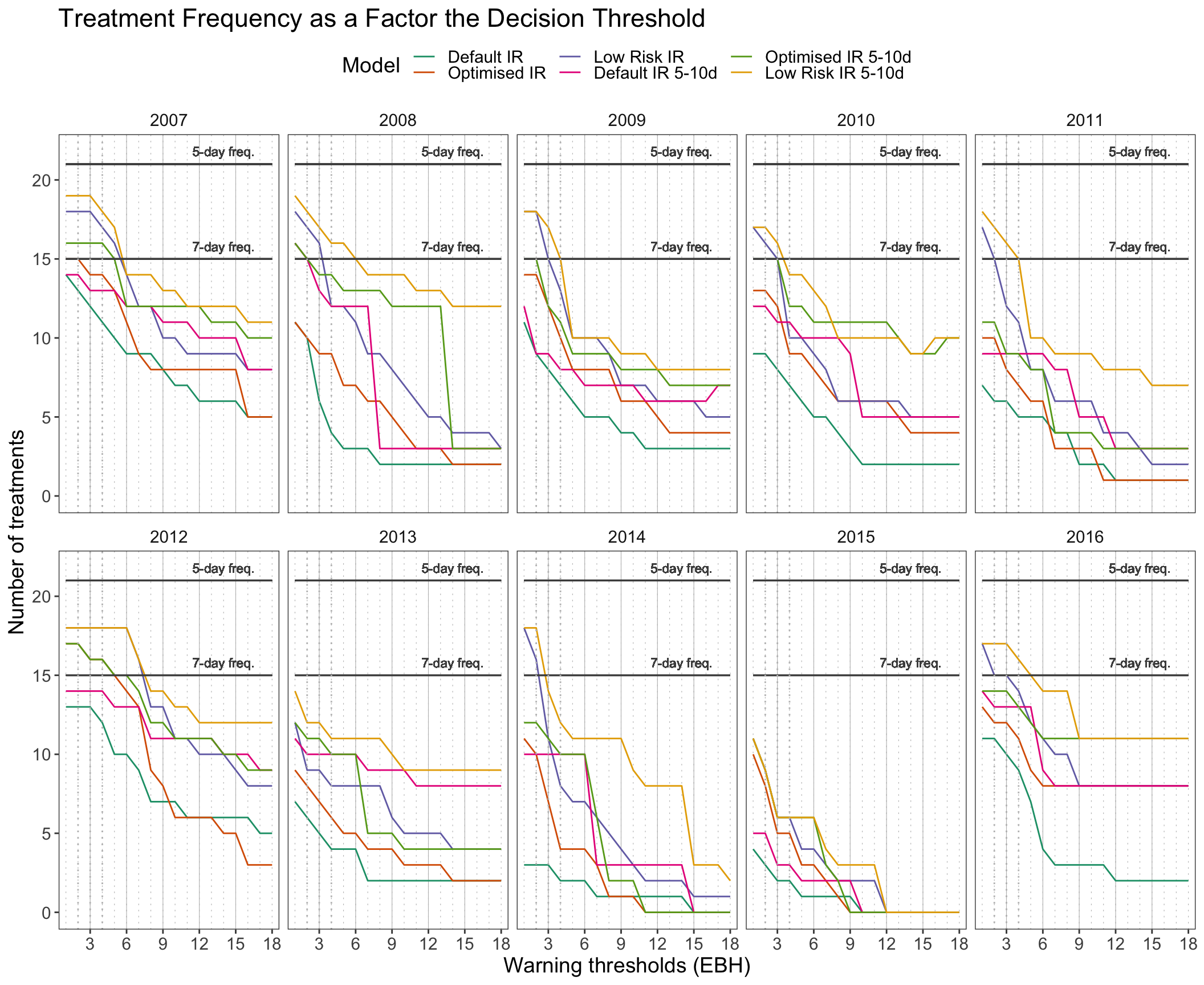

Two alternative model parameterisations are proposed and in this section we will implement a theoretical study to evaluate the usefulness with regard to their their potential to save fungicide applications. The performance is evaluated in two ways, as a reduction of:

- Number of treatments:

With minimum window of 5 days between treatments

With minimum of 5 and maximum of 10 days between treatments

- Dose reduction based on 7 day calendar treatment

Cut-off Dates

Soil temperature data.

soildf <-

read_csv(

here::here("tmp", "agridata_375.csv"),

col_types = cols(X1 = col_skip(), X10 = col_skip()),

skip = 7

)

soildf$date = as.Date(soildf$date, format = "%d-%b-%Y", tz = "UTC")

soildf <-

tidyr::separate(soildf,

"date",

c("year_var", "month", "day"),

sep = "-",

remove = F)

soildf$doy <- yday(soildf$date)

soildf[, 2:5] <- lapply(soildf[, 2:5], as.numeric)We assume that the planting starts day after the daily average soil temperature becomes higher than 8°C for three consecutive days in the beginning of April. This is common practice in Ireland, recommended by Teagasc.

soildf <- soildf[with(soildf, month >= 4), ]

dfls <- base::split(soildf, soildf$year_var)

dates_cut <-

lapply(dfls, function(x) {

criteria <- as.numeric(x$soil > 8)

#cumulative sum days matching the criteria

criteria_sum <-

stats::ave(criteria, cumsum(criteria == 0), FUN = cumsum)

pl_date <- x[match(3, criteria_sum) + 1, "date"]

data.frame("year" = unique(year(x$date)),

date = pl_date[1, 1])

}) %>%

bind_rows() %>%

rename("plant_date" = date)

rm(soildf, dfls)The data for 2007 is missing so we shall use the mean date from other years.

dates_cut$plant_doy <- yday(dates_cut$plant_date)

dates_cut <-

bind_rows(

list(

dates_cut,

data.frame(

year = 2007,

plant_date = as.Date(strptime(paste("2007", mean(dates_cut$plant_doy, na.rm = TRUE)), format = "%Y %j")),

plant_doy = mean(dates_cut$plant_doy)

)

))We will assume that the full emergence takes 3 and additional minimum of 2 weeks for full emergence and for the first plants to start meeting in the rows.

meet_in_rows <- 5 * 7

dates_cut$prot_start <- with(dates_cut, plant_doy + meet_in_rows)

dates_cut$prot_start <- paste(dates_cut$year,

dates_cut$prot_start,

sep = "-") %>%

strptime(format = "%Y-%j") %>%

as.Date()For the purposes of this study we will assume that the growing season lasts 120 days. However, the pesticide protection lasts for at least another three weeks to allow the tuber growth.

duration_of_season <- 120 + 21

dates_cut$season_end <-

with(dates_cut, plant_doy + duration_of_season)

dates_cut$season_end <-

paste(dates_cut$year,

dates_cut$season_end,

sep = "-") %>%

strptime(format = "%Y-%j") %>%

as.Date()

dates_cutLoad the weather data with model outputs.

load(file = here::here("data", "op_2007_16", "OP_for_analysis.RData"))

years <- unique(OP$year_var)

df <- filter(OP, month >= 2 & month <= 9)

rm(OP)

df <-

df[, c(

"date",

"year_var",

"short_date",

"week_var",

"doy",

"rain",

"temp",

"rhum",

"90_10_12_rain_ebh",

"88_12_10_rainrh_ebh",

"88_10_10_rainrh_ebh"

)]

#Keep only columns needed for faster code execution

df <- dplyr::rename(df,

ir = "90_10_12_rain_ebh",

ir_opt = "88_12_10_rainrh_ebh",

ir_low_risk = "88_10_10_rainrh_ebh")Treatment Frequency: Model vs. Calendar

Calculate the number of calendar treatments, assuming they would start 5 weeks and end 141 days after the planting

(calendar_five <- round(c(duration_of_season - 5 * 7) / 5, 0))## [1] 21(calendar_seven <- round(c(duration_of_season - 5 * 7) / 7, 0))## [1] 15We are assuming that a fungicide treatment is activated every time the warning threshold is reached. Minimum period until the next treatment is 5 days. Cumulative sum of recommended treatments is calculated per season.

ControlFreqFun <-

function(y,

weather_data,

dates_cut,

min_prot_dur = NULL,

max_prot_dur = NULL) {

#Set the warning threshold and run the rest of the script

warning_threshold <- y

#Weather and model output data

fun_df <- weather_data

#Each warning would cause treatment that will keep the plants protected for a period of time

min_prot_dur <-

ifelse(is.null(min_prot_dur), 5, min_prot_dur)#If not defined default value is 5 days

max_prot_dur <-

ifelse(is.null(max_prot_dur), 10, max_prot_dur)#If not defined default value is 10 days

#A function to subset the data for the period of interest in each year

test.overlap = function(vals, start_date, end_date) {

rowSums(mapply(function(a, b)

between(vals, a, b),

start_date, end_date)) > 0

}

#Subset the of the data for the duration of 'protection' period for each year

trt_df <-

fun_df %>%

filter(

test.overlap(

short_date,

dates_cut$prot_start,

dates_cut$season_end + max_prot_dur #Additional days for risk estimation and timing of treatments

)

) %>%

dplyr::select(

ends_with("year_var"),

ends_with("week_var"),

ends_with("doy"),

starts_with("ir")

) %>%

group_by(year_var) %>%

#if there was an accumulation from previous day, it would triger a warning

#Check all of the first five rows because of possible break of 5 hours

mutate_at(., .vars = colnames(.[grep("ir", colnames(.))]),

funs(

ifelse(row_number() <= 5 & . >= warning_threshold,

warning_threshold, .)

)) %>%

#all five values all changed so we have to delete 4 of them and leave only 1

mutate_at(., .vars = colnames(.[grep("ir", colnames(.))]),

funs(ifelse(

row_number() <= 4 & . == warning_threshold, 0, .

))) %>%

# Change values coresponding to the warning threshold to 1 for calculating the sum

mutate_at(., .vars = colnames(.[grep("ir", colnames(.))]),

funs(ifelse(. == warning_threshold, 1, 0))) %>%

group_by(year_var, week_var, doy) %>%

summarise_at(., .vars = colnames(.[grep("ir", colnames(.))]), .funs = sum)

#####################################################################

#Calculate the number of treatments with with minimum protection duration of 5 days

TreatmentWindowOne <-

function(model_output,

min_prot_dur ,

max_prot_dur) {

# model_output <- trt_df_one[[1]]$ir

y <- model_output

for (i in seq_along(1:c(length(y) - max_prot_dur))) {

#Following treatment will be implemented on day 5 if there is 1 in the next five days

if (y[i] == 1 & sum(y[c(i + 1):c(i + min_prot_dur)]) > 0) {

y[i + min_prot_dur] <- 1

y[c(i + 1):c(i + c(min_prot_dur - 1))] <- 0

}

}

#Add zeros for the duration of added period

y[c(length(y) - max_prot_dur):length(y)] <- 0

y

}

#Apply function on yearly subsets

trt_df_one <-

split(trt_df, trt_df$year_var)

for (year in seq_along(trt_df_one)) {

x <- trt_df_one[[year]]

trt_df_one[[year]] <-

lapply(x[grep("ir", colnames(x))], function(x)

TreatmentWindowOne(x, min_prot_dur , max_prot_dur)) %>%

bind_cols()

}

trt_df_one <- bind_rows(trt_df_one)

trt_df_one <- bind_cols(trt_df["year_var"], trt_df_one)

#####################################################################

#Calculate the number of treatments with 5-10 day sliding treatment interval

TreatmentWindow <-

function(model_output,

min_prot_dur ,

max_prot_dur) {

x <- model_output

#attach vector, 10 days of 0

y <- vector(mode = "numeric", length = length(x))

# find the first treatment

y[which(x == 1)[1]] <- 1

for (i in seq_along(1:c(length(y) - max_prot_dur))) {

#Following treatment will be implemented on day 5 if there is 1 in the next five days

if (y[i] == 1 & sum(x[c(i + 1):c(i + 5)]) > 0) {

y[i + 5] <- 1

}

#Or on any following day with risk threshold reached

if (y[i] == 1 &

sum(x[c(i + 1):c(i + 5)]) == 0 & x[i + 6] == 1) {

y[i + 6] <- 1

}

if (y[i] == 1 &

sum(x[c(i + 1):c(i + 6)]) == 0 & x[i + 7] == 1) {

y[i + 7] <- 1

}

if (y[i] == 1 &

sum(x[c(i + 1):c(i + 7)]) == 0 & x[i + 8] == 1) {

y[i + 8] <- 1

}

if (y[i] == 1 &

sum(x[c(i + 1):c(i + 8)]) == 0 & x[i + 9] == 1) {

y[i + 9] <- 1

}

#If warning threshold was not reached at all or 10th day, then spray on 10th day

if (y[i] == 1 & sum(x[c(i + 1):c(i + 9)]) == 0) {

y[i + 10] <- 1

}

#if there was no treatment

if (i == c(length(y) - max_prot_dur))

break

}

#Add zeros for the duration of added period

y[c(length(y) - max_prot_dur):length(y)] <- 0

y

}

#If the threshold is reached twive within a single day reset the value to 1 to enable the counter

trt_df[, grep("ir", colnames(trt_df))] <-

lapply(trt_df[, grep("ir", colnames(trt_df))], function(x)

ifelse(x > 1, 1, x))

trt_df_window <-

split(trt_df, trt_df$year_var)

# Calculate number of treatments per year

for (year in seq_along(trt_df_window)) {

x <- trt_df_window[[year]]

trt_df_window[[year]] <-

lapply(x[grep("ir", colnames(x))], function(x)

TreatmentWindow(x, min_prot_dur, max_prot_dur)) %>%

bind_cols() %>%

rename_all(., .funs = funs(paste0("w_", .)))

}

trt_df_window <- bind_rows(trt_df_window)

#Combine the data for both scenarios

trt_df <- bind_cols(trt_df_one, trt_df_window)

#Calculate number of treatmetns per year

sum_df <-

trt_df %>%

group_by(year_var) %>%

summarise_at(colnames(trt_df[, grep("ir", colnames(trt_df))]),

.funs = sum)

sum_df$warning_thres <- warning_threshold

return(sum_df)

}Calculate the number of treatments for each warning threshold (EBH). Once again, we will use power of parallel processing for this task.

#select max warning threshold

warning_thresholds <- 1:18

begin <- Sys.time()

#Detect the number of cores and set it to total minus 1, if there are multiple cores, to avoid overload

cores <- ifelse(detectCores() > 1, detectCores() - 1, 1)

cl <- makeCluster(cores)

clusterExport(cl, c("df", "dates_cut", "ControlFreqFun"))

clusterEvalQ(cl, library("tidyverse", quietly = TRUE, verbose = FALSE))## [[1]]

## [1] "forcats" "stringr" "dplyr" "purrr" "readr"

## [6] "tidyr" "tibble" "ggplot2" "tidyverse" "stats"

## [11] "graphics" "grDevices" "utils" "datasets" "methods"

## [16] "base"

##

## [[2]]

## [1] "forcats" "stringr" "dplyr" "purrr" "readr"

## [6] "tidyr" "tibble" "ggplot2" "tidyverse" "stats"

## [11] "graphics" "grDevices" "utils" "datasets" "methods"

## [16] "base"

##

## [[3]]

## [1] "forcats" "stringr" "dplyr" "purrr" "readr"

## [6] "tidyr" "tibble" "ggplot2" "tidyverse" "stats"

## [11] "graphics" "grDevices" "utils" "datasets" "methods"

## [16] "base"control_eval <- pbapply::pblapply(warning_thresholds, function(x)

{

xx <-

ControlFreqFun(x,

df,

dates_cut,

min_prot_dur = 5,

max_prot_dur = 10)

return(xx)

},

cl = cl)

begin - Sys.time() #check the duration of the pcontrol_evaless in the console## Time difference of -2.454585 secsstopCluster(cl)Melt the data frame.

control_eval_long <-

control_eval %>%

bind_rows() %>%

reshape2::melt(

id.vars = c("year_var", "warning_thres"),

variable.name = "programme",

value.name = "no_of_treatments"

)

control_eval_long %>%

head() %>%

pander()| year_var | warning_thres | programme | no_of_treatments |

|---|---|---|---|

| 2007 | 1 | ir | 14 |

| 2008 | 1 | ir | 11 |

| 2009 | 1 | ir | 11 |

| 2010 | 1 | ir | 9 |

| 2011 | 1 | ir | 7 |

| 2012 | 1 | ir | 13 |

plot_models <-

control_eval_long %>%

# control_eval_long%>%

mutate(programme = ifelse(

programme == "ir",

"a) Default IR",

ifelse(

programme == "ir_opt",

"b) Optimised IR",

ifelse(

programme == "ir_low_risk",

"c) Low Risk IR",

ifelse(

programme == "w_ir",

"d) Default IR 5-10d",

ifelse(

programme == "w_ir_opt",

"e) Optimised IR 5-10d",

ifelse(programme == "w_ir_low_risk", "f) Low Risk IR 5-10d", "")

)

)

)

)

)) %>%

mutate(programme = factor(

programme,

levels = c(

"a) Default IR",

"b) Optimised IR",

"c) Low Risk IR",

"d) Default IR 5-10d",

"e) Optimised IR 5-10d",

"f) Low Risk IR 5-10d"

)

)) %>%

mutate(Years = factor(year_var)) %>%

ggplot(., aes(factor(warning_thres), no_of_treatments)) +

geom_vline(

xintercept = seq(1, 18, 1),

size = 0.2,

color = "gray",

linetype = "dotted"

) +

geom_vline(

xintercept = seq(3, 15, 3),

size = 0.2,

color = "gray",

linetype = "solid"

) +

geom_jitter(

aes(color = Years),

position = position_jitter(width = 0.1),

alpha = 0.6,

size = 1

) +

scale_colour_brewer(palette = "Set3") +

geom_line(aes(warning_thres, calendar_seven), colour = gray.colors(2)[1]) +

geom_label(

aes(14, calendar_seven + 0.8 , label = "7-day freq."),

colour = gray.colors(2)[1],

fill = NA,

label.size = NA

) +

geom_line(aes(warning_thres, calendar_five), colour = gray.colors(2)[1]) +

geom_label(

aes(14, calendar_five + 0.8, label = "5-day freq."),

colour = gray.colors(2)[1],

fill = NA,

label.size = NA

) +

geom_smooth(method = "loess",

se = FALSE,

color = gray.colors(2)[1],

aes(group = 1)) +

facet_wrap(~ programme, ncol = 3) +

# ggtitle("Cost effectiveness of model versions") +

xlab("Warning thresholds (EBH)") +

ylab("Number of treatments") +

scale_x_discrete(breaks = seq(0, 18, 3)) +

theme(legend.position = "top") +

theme_article()+

theme(text = element_text(size=18))

ggsave(plot = plot_models,

file = here::here("images", "pub","plot_models.png"),

device = "png",

width = 19,

height = 13,

units = "cm",

dpi = 500

)

plot_models

The figure shows how does the frequency of recommended treatments vary with the increasing decision thresholds of the three model versions and how does it differ from the calendar spray programmes. The dots represent the number of treatments in relation to corresponding warning threshold, where each dot represents a single year. If the fitted smoothed line is above the estimated calendar frequency line, the model recommends more treatments than the usual standard calendar programme.

There is variation in the data and it would be interesting to see if there is an effect of year.

control_eval_long %>%

mutate(programme = ifelse(

programme == "ir",

"Default IR",

ifelse(

programme == "ir_opt",

"Optimised IR",

ifelse(

programme == "ir_low_risk",

"Low Risk IR",

ifelse(

programme == "w_ir",

"Default IR 5-10d",

ifelse(

programme == "w_ir_opt",

"Optimised IR 5-10d",

ifelse(programme == "w_ir_low_risk", "Low Risk IR 5-10d", "")

)

)

)

)

)) %>%

mutate(programme = factor(

programme,

levels = c(

"Default IR",

"Optimised IR",

"Low Risk IR",

"Default IR 5-10d",

"Optimised IR 5-10d",

"Low Risk IR 5-10d"

)

)) %>%

mutate(Years = factor(year_var)) %>%

ggplot(.,

aes(

factor(warning_thres),

no_of_treatments,

group = programme,

colour = programme

)) +

geom_vline(

xintercept = seq(1, 18, 1),

size = 0.2,

color = "gray",

linetype = "dotted"

) +

geom_vline(

xintercept = seq(3, 15, 3),

size = 0.2,

color = "gray",

linetype = "solid"

) +

geom_line(aes()) +

geom_line(aes(warning_thres, calendar_seven), colour = gray.colors(2)[1]) +

geom_label(

aes(14, calendar_seven + 0.8 , label = "7-day freq."),

colour = gray.colors(2)[1],

fill = NA,

label.size = NA,

size = 3

) +

geom_line(aes(warning_thres, calendar_five), colour = gray.colors(2)[1]) +

geom_label(

aes(14, calendar_five + 0.8, label = "5-day freq."),

colour = gray.colors(2)[1],

fill = NA,

label.size = NA,

size = 3

) +

facet_wrap(~ Years, ncol = 5) +

ggtitle("Treatment Frequency as a Factor the Decision Threshold") +

theme(strip.text.y = element_blank()) +

xlab("Warning thresholds (EBH)") +

ylab("Number of treatments") +

theme_article() +

geom_vline(

xintercept = c(2, 3, 4),

size = 0.5,

color = "gray",

linetype = "dotted"

) +

scale_x_discrete(breaks = seq(0, 18, 3)) +

scale_y_continuous(breaks = seq(0, 30, 5)) +

scale_colour_brewer("Model",

palette = "Dark2") +

theme(legend.position = "top",

text = element_text(size = 14))

It is evident that setting a threshold to even a minimum accumulation of risk, in other words a single effective blight hour, is triggering less treatments in certain years.

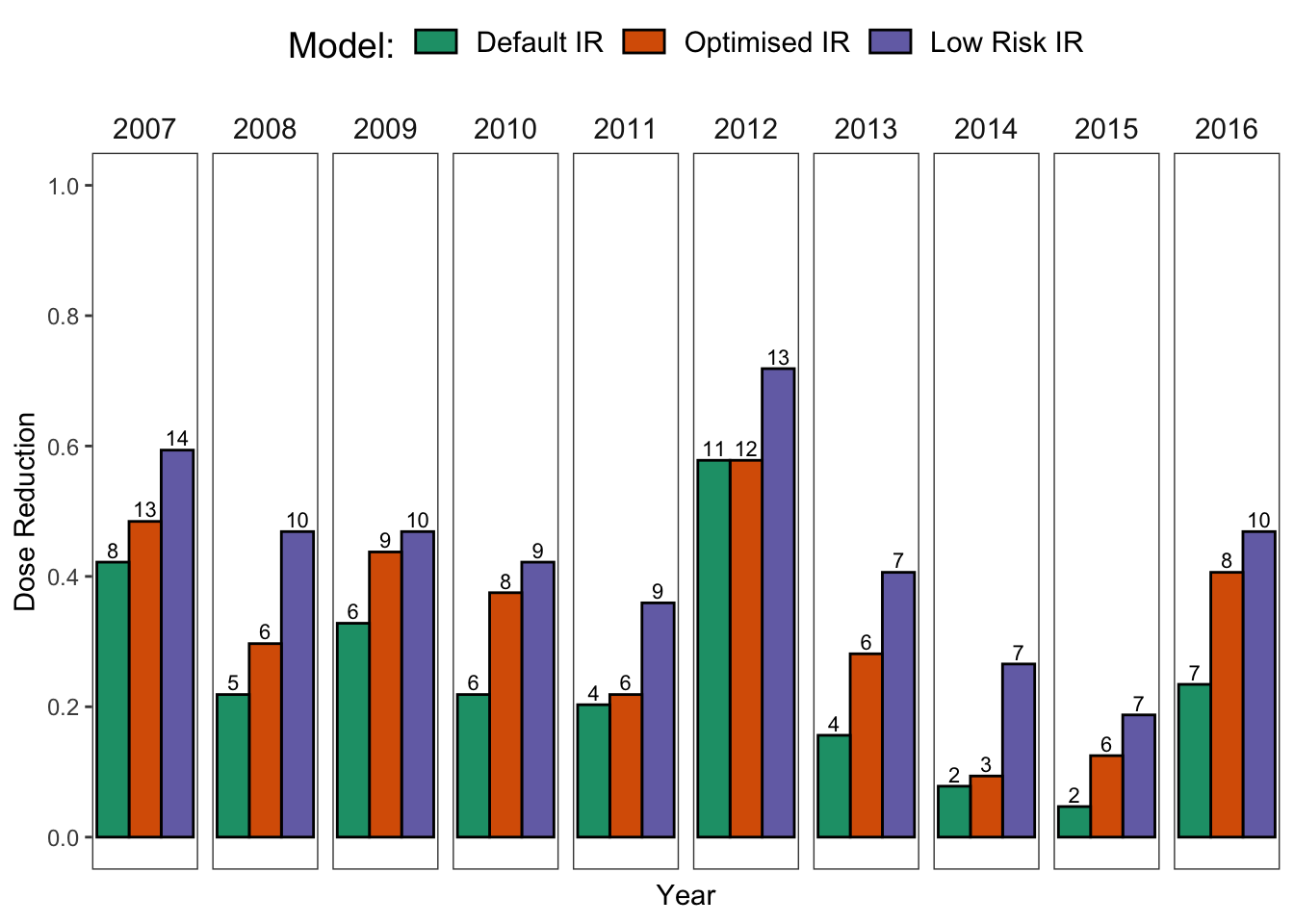

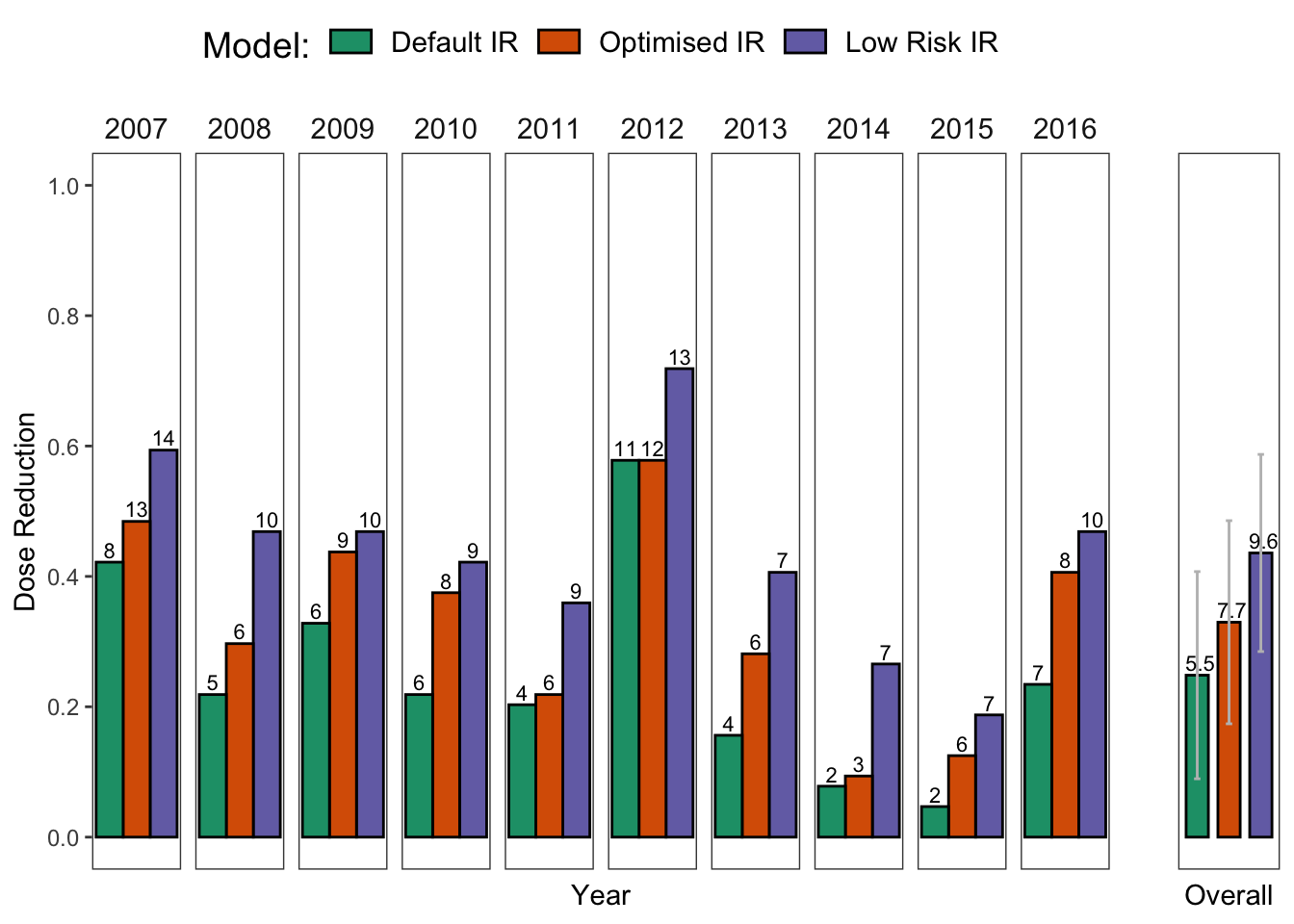

Dose Reduction for Calendar Treatment

Possible dose reductions are calculated for the usual 7 day calendar treatment.

Calculate the dose reductions based on the model outputs. Dose is reduced to quarters based on the risk output. The maximum dose is applied if the risk is over 12EBH.

test.overlap = function(vals, start_date, end_date) {

rowSums(mapply(function(a, b)

between(vals, a, b),

start_date, end_date)) > 0

}

#Dose calculation function based on the risk output

Dose <- function(x) {

ifelse(x >= 0 & x <= 3, 0,

ifelse(x > 3 & x <= 6, 0.25,

ifelse(

x > 6 & x <= 9, 0.5,

ifelse(x > 9 & x <= 12, 0.75,

ifelse(x > 12, 1, 1))

)))

}The function is applied on the data.

dosedf <-

df %>%

filter(test.overlap(short_date,

dates_cut$prot_start,

dates_cut$season_end)) %>%

dplyr::select(ends_with("year_var"),

ends_with("week_var"),

ends_with("doy"),

starts_with("ir")) %>%

group_by(year_var, week_var, doy) %>%

summarise_at(., .vars = colnames(.[grep("ir", colnames(.))]), .funs = max) %>% #get max daily values

group_by(year_var) %>%

mutate(treat_week = sort(rep(seq(1, 19, 1), 7))[1:n()]) %>%

group_by(year_var, treat_week) %>%

summarise_at(., .vars = colnames(.[grep("ir", colnames(.))]), .funs = max) %>%

mutate_at(.,

.vars = colnames(.[grep("ir", colnames(.))]),

.funs = Dose)#Get number of treatments per year and the dose reduction

Summary <- function(x) {

data.frame(

n = length(x),

treatments = sum(as.numeric(x != 0)),

dose = sum(x) / length(x)

)

}

p1 <-

dosedf %>%

dplyr::select(-("treat_week")) %>%

reshape2::melt(id = "year_var",

variable.name = "model",

value.name = "dose") %>%

mutate(model = ifelse(

model == "ir",

"Default IR",

ifelse(

model == "ir_opt",

"Optimised IR",

ifelse(model == "ir_low_risk", "Low Risk IR", "")

)

)) %>%

mutate(model = factor(model, levels = c("Default IR",

"Optimised IR",

"Low Risk IR"))) %>%

group_by(year_var, model) %>%

do(Summary(.$dose)) %>%

ggplot(aes(

x = year_var,

y = dose,

fill = model,

group = model

)) +

geom_bar(

color = "black",

stat = "identity",

position = position_dodge(),

width = 0.9

) +

geom_text(

aes(label = treatments),

vjust = -0.3,

color = "black",

position = position_dodge(0.9),

size = 2.9

) +

scale_fill_brewer("Model:", palette = "Dark2") +

scale_y_continuous(limits = c(0, 1), breaks = seq(0, 1, 0.2)) +

theme_article() +

facet_grid(~ year_var, scales = "free") +

labs(x = "Year",

y = "Dose Reduction") +

theme(

axis.title = element_text(size = 11),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

axis.text = element_text(size = 9),

legend.position = "top",

axis.text.x = element_blank(),

axis.ticks.x = element_blank()

)+

theme(text = element_text(size=14))

p1

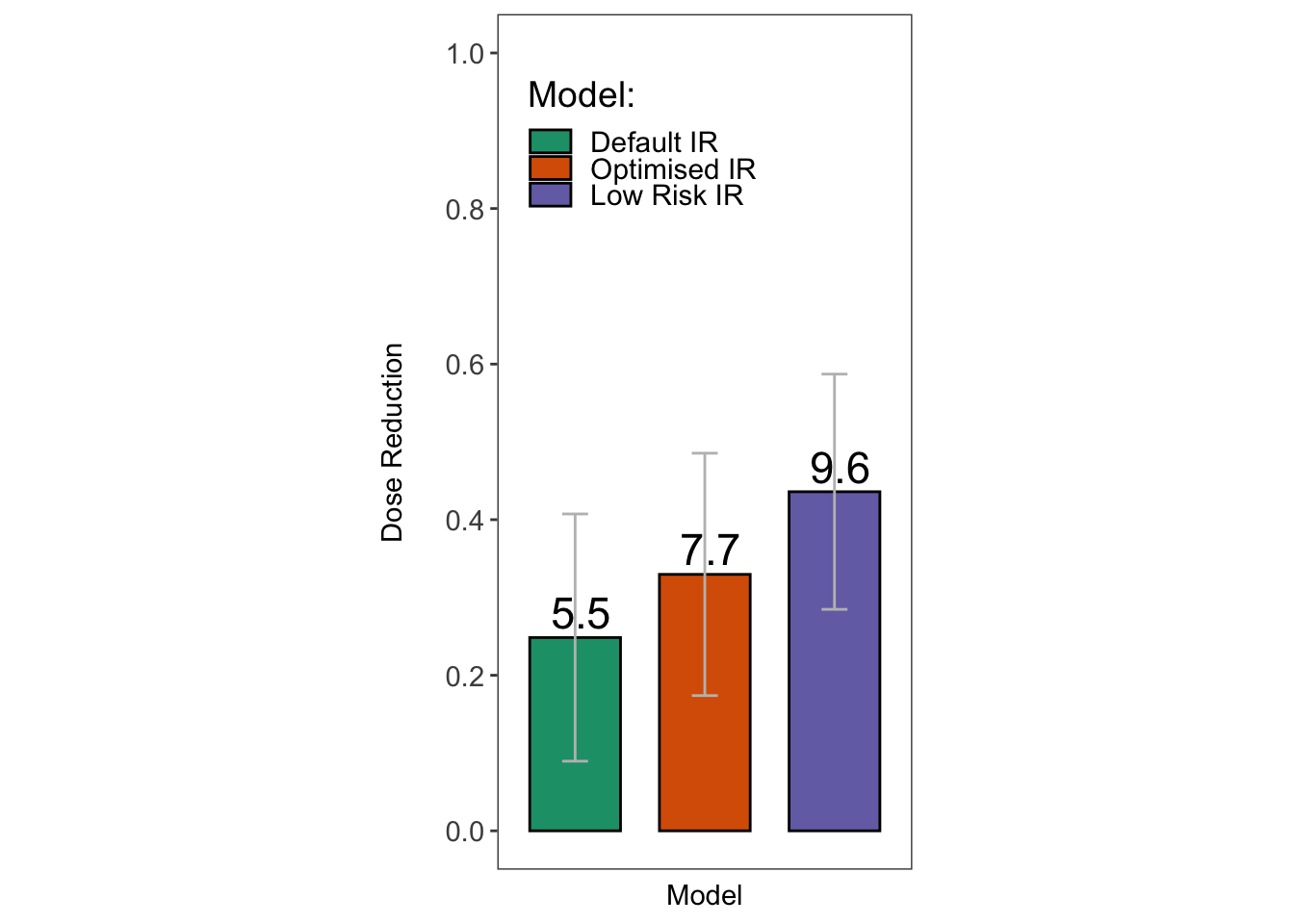

Now, let us make some summaries to see if we can reduce the number of treatments and fungicide usage based on the model outputs.

dose_summary <-

dosedf %>%

dplyr::select(-("treat_week")) %>%

reshape2::melt(id = "year_var",

variable.name = "model",

value.name = "dose") %>%

mutate(model = ifelse(

model == "ir",

"Default IR",

ifelse(

model == "ir_opt",

"Optimised IR",

ifelse(model == "ir_low_risk", "Low Risk IR", "")

)

)) %>%

mutate(model = factor(model,

levels = c("Default IR",

"Optimised IR",

"Low Risk IR"))) %>%

group_by(year_var, model) %>%

do(Summary(.$dose))

dose_summary %>%

mutate(Model = model) %>%

group_by(Model) %>%

summarise(

'Mean Treatments' = mean(treatments),

'Mean Dose' = round(mean(dose),3),

'sd' = round(sd(dose), 3),

'Min \nTreatments' = min(treatments),

'Max \nTreatments' = max(treatments),

'Min Dose' = round(min(dose),3) ,

'Max Dose' = round( max(dose),3)

) %>%

kable(format = "html") %>%

kableExtra::kable_styling( latex_options = "striped",full_width = FALSE)| Model | Mean Treatments | Mean Dose | sd | Min Treatments | Max Treatments | Min Dose | Max Dose |

|---|---|---|---|---|---|---|---|

| Default IR | 5.5 | 0.248 | 0.159 | 2 | 11 | 0.047 | 0.578 |

| Optimised IR | 7.7 | 0.330 | 0.156 | 3 | 13 | 0.094 | 0.578 |

| Low Risk IR | 9.6 | 0.436 | 0.151 | 7 | 14 | 0.188 | 0.719 |

p2 <-

dose_summary %>%

group_by(model) %>%

summarise(mean_trt = mean(treatments),

mean = mean(dose),

sd = sd(dose)) %>%

ggplot(., aes(

x = model,

y = mean,

fill = model,

group = model

)) +

geom_bar(

color = "black",

stat = "identity",

position = position_dodge(),

width = 0.7

) +

geom_errorbar(aes(ymin = mean - sd,

ymax = mean + sd),

width = 0.2,

color = "gray") +

scale_fill_brewer("Model:", palette = "Dark2") +

theme_article() +

scale_y_continuous(limits = c(0, 1), breaks = seq(0, 1, 0.2)) +

labs(x = "Model",

y = "Dose Reduction\n") +

theme(

axis.title = element_text(size = 11),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

axis.text = element_text(size = 11),

legend.position = "none",

axis.text.x = element_blank(),

axis.ticks.x = element_blank()

)

p2 +

theme(legend.position = c(0.35, 0.85))+

geom_text(

aes(label = mean_trt),

vjust = -0.3,

hjust = 0.4,

color = "black",

position = position_dodge(0.9),

size = 6

) +

coord_equal(6 / 1)+

theme(text = element_text(size=14))

p2 <-

p2+

labs(x = "Overall", y= "")+

theme(axis.text = element_blank(),

axis.ticks.y = element_blank())+

geom_text(

aes(label = mean_trt),

vjust = -0.3,

hjust = 0.4,

color = "black",

position = position_dodge(0.9),

size = 2.9

) +

coord_equal(1 / 1)

ggarrange(p1, p2, ncol = 2, widths = c(10,1))

Packages used

sessionInfo()## R version 3.6.1 (2019-07-05)

## Platform: x86_64-apple-darwin15.6.0 (64-bit)

## Running under: macOS Mojave 10.14.6

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/3.6/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/3.6/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_AU.UTF-8/en_AU.UTF-8/en_AU.UTF-8/C/en_AU.UTF-8/en_AU.UTF-8

##

## attached base packages:

## [1] parallel stats graphics grDevices utils datasets methods

## [8] base

##

## other attached packages:

## [1] pander_0.6.3 kableExtra_1.1.0 here_0.1

## [4] mgsub_1.7.1 rcompanion_2.3.0 R.utils_2.9.0

## [7] R.oo_1.22.0 R.methodsS3_1.7.1 egg_0.4.5

## [10] gridExtra_2.3 ggthemes_4.2.0 ggrepel_0.8.1

## [13] pbapply_1.4-1 remotes_2.1.0 pracma_2.2.5

## [16] devtools_2.1.0 usethis_1.5.1.9000 padr_0.5.0

## [19] knitr_1.24 lubridate_1.7.4 data.table_1.12.2

## [22] readxl_1.3.1 forcats_0.4.0 stringr_1.4.0

## [25] dplyr_0.8.3 purrr_0.3.2 readr_1.3.1

## [28] tidyr_0.8.3 tibble_2.1.3 ggplot2_3.2.1

## [31] tidyverse_1.2.1

##

## loaded via a namespace (and not attached):

## [1] TH.data_1.0-10 colorspace_1.4-1 modeltools_0.2-22

## [4] rprojroot_1.3-2 fs_1.3.1 rstudioapi_0.10

## [7] manipulate_1.0.1 mvtnorm_1.0-11 coin_1.3-1

## [10] xml2_1.2.2 codetools_0.2-16 splines_3.6.1

## [13] libcoin_1.0-5 pkgload_1.0.2 zeallot_0.1.0

## [16] jsonlite_1.6 broom_0.5.2 compiler_3.6.1

## [19] httr_1.4.1 backports_1.1.4 assertthat_0.2.1

## [22] Matrix_1.2-17 lazyeval_0.2.2 cli_1.1.0

## [25] htmltools_0.3.6 prettyunits_1.0.2 tools_3.6.1

## [28] gtable_0.3.0 glue_1.3.1 reshape2_1.4.3

## [31] Rcpp_1.0.2 cellranger_1.1.0 vctrs_0.2.0

## [34] nlme_3.1-140 lmtest_0.9-37 xfun_0.8

## [37] ps_1.3.0 testthat_2.2.1 rvest_0.3.4

## [40] MASS_7.3-51.4 zoo_1.8-6 scales_1.0.0

## [43] hms_0.5.0 sandwich_2.5-1 expm_0.999-4

## [46] RColorBrewer_1.1-2 yaml_2.2.0 memoise_1.1.0

## [49] EMT_1.1 stringi_1.4.3 highr_0.8

## [52] desc_1.2.0 nortest_1.0-4 boot_1.3-22

## [55] pkgbuild_1.0.4 rlang_0.4.0 pkgconfig_2.0.2

## [58] matrixStats_0.54.0 evaluate_0.14 lattice_0.20-38

## [61] labeling_0.3 processx_3.4.1 tidyselect_0.2.5

## [64] plyr_1.8.4 magrittr_1.5 R6_2.4.0

## [67] DescTools_0.99.28 generics_0.0.2 multcompView_0.1-7

## [70] multcomp_1.4-10 pillar_1.4.2 haven_2.1.1

## [73] foreign_0.8-71 withr_2.1.2 survival_2.44-1.1

## [76] modelr_0.1.5 crayon_1.3.4 rmarkdown_1.14

## [79] grid_3.6.1 callr_3.3.1 webshot_0.5.1

## [82] digest_0.6.20 stats4_3.6.1 munsell_0.5.0

## [85] viridisLite_0.3.0 sessioninfo_1.1.1